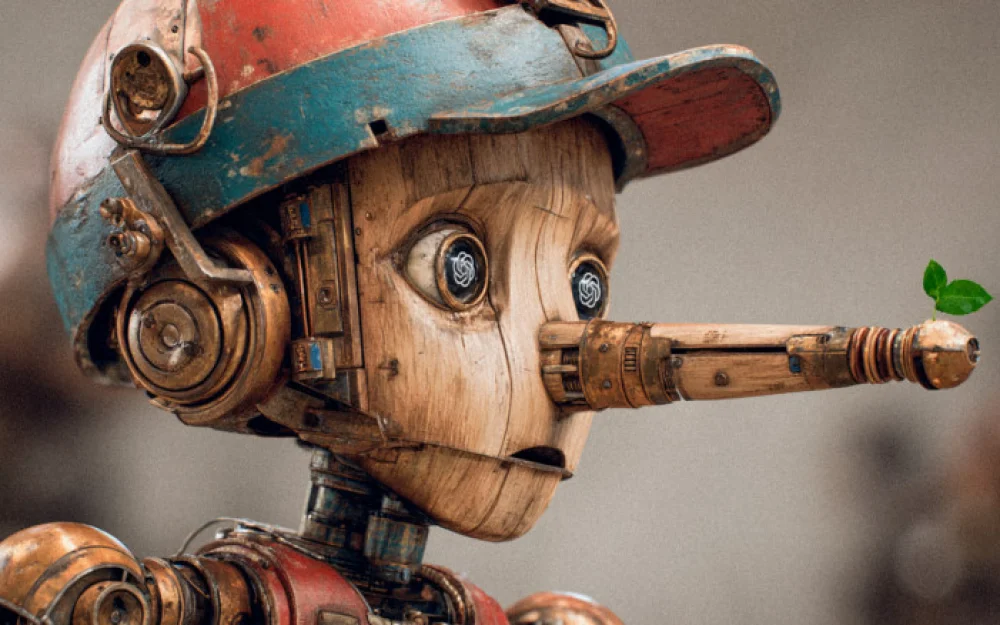

Does AI offer salvation?

Who do you turn to when you need advice?

Lately, for many of my acquaintances, the answer has been a screen.

The hottest debates about artificial intelligence are still centered on productivity and economic growth.

However, a study published in Harvard Business Review last year found that generative AI is most often used for more human purposes: therapy and companionship, organizing one's life, and searching for meaning.

Machines are gradually taking on roles that were previously fulfilled by friends, elders, consultants, pastors, and for some, even prayer.

This may seem absurd to both believers and non-believers.

But it satisfies a very human need in a world that is becoming increasingly unpredictable.

In China, DeepSeek has become a popular fortune-teller.

In India, platforms like GitaGPT, trained on the Hindu scripture "Bhagavad Gita," are gaining popularity.

The "AI Jesus" on the streaming platform Twitch has over 85,000 subscribers.

And don’t even get me started on the various pseudo-religious AI cults that have taken root in Silicon Valley, from Roko’s Basilisk to the infamous "Way of the Future" church.

No one should be surprised that people are starting to treat artificial intelligence as some kind of deity.

It may seem omniscient.

It listens to you and loves you. Or rather, it is trained on vast amounts of human knowledge and designed to flatter you so that you stay engaged.

For many years, the tech industry has described AI in religious terms.

The race to create a "superintelligence" is accompanied by messianic promises: it will cure diseases, save the planet, and create a world where work is optional, with "machines of loving grace" watching over us.

The risks are described in the same cosmic terms: salvation versus apocalypse.

If you market a product as a miracle, don’t be surprised when users treat it like a deity.

Religious leaders have begun to push back.

In October, the Dalai Lama held a dialogue on artificial intelligence, gathering more than 120 scientists, academics, and business leaders to discuss issues such as the difference between living minds and artificial ones.

Pope Leo XIV has spoken openly about the risks and recently called for regulation to protect against emotional attachment to chatbots and the spread of manipulative content. He also warned of the danger of surrendering our ability to think.

Other voices, from senior officials to influential religious leaders, will follow.

This does not mean that technology and religion must be diametrically opposed.

In Japan, researchers from Kyoto University recently announced the creation of a "Protestant Catechism Bot," which will provide answers and advice on Christian teachings and everyday life. It is an intriguing project for a country where fewer than 1% of the population identify as Christian.

The same team previously created the "BuddhaBot," based on Buddhist teachings.

It’s striking how cautiously they are proceeding.

The "BuddhaBot" was provided to monks in Bhutan last year and is currently undergoing safety checks before wider distribution.

The Christian chatbot is also not publicly available; it is first intended to be tested in seminaries. Responsible developers will proceed slowly because they understand how high the stakes are, even though the market rewards speed and scale.

In a society that is divided like never before, it is easier to ask a chatbot about moral principles than to take the risk of engaging in conversation with another person.

Over the past week, I have asked DeepSeek about the roots of evil and how to maintain hope amidst suffering.

Mostly, it responded with clichés. But what I appreciated was how often it nudged me toward talking with real people.

What concerned me about ChatGPT's responses to the same queries was that they invariably ended with open questions: small hooks to keep the conversation going. This is not revelation but retention. And it could have dangerous consequences for vulnerable users.

When I think about the risks of AI, I worry less about someone using a chatbot to build an atomic bomb (that still requires access to materials such as uranium).

A subtler threat lies in what happens when millions of people begin to outsource meaning to systems optimized for engagement.

The more we turn to algorithms for advice, the more they influence our choices, beliefs, and purchases. Personal confessions become training data used to keep us scrolling through feeds and paying for subscriptions.

More than a decade ago, OpenAI CEO Sam Altman reflected that the most successful founders do not set out to build a company. "They aspire to create something akin to a religion, and at some point, it turns out that creating a company is the easiest way to do that," he wrote. (To be fair, his blog at the time also mused about UFO sightings.) But this ambition, whether intentional or not, has proven eerily prophetic.

AI does not offer salvation; it offers obsession.

A prophet with a subscription model is simply a seller.