SCA in the language of a security specialist

Recently, several people in our company have taken an interest in DevSecOps, each for their own reasons. One was asked an awkward question by a customer, another decided to invest in self-development, and a third has someone close to them in AppSec and wants to be able to keep up a conversation about work.

In this article, I want to explain the core of the secure development process in plain "information security" terms. It is meant for those who are encountering it for the first time, or who have heard bits and pieces but cannot fit them into a coherent picture. In reality, it is very close to what you or your colleagues already do every day, even if you have never worked in development or touched source code.

This should help you speak the same language as customers who, besides building information security processes, also develop their own software. Let's go!

What is SCA?

What does the abbreviation stand for, and how is it rendered locally? The original term is Software Composition Analysis, and in local usage SCA is most often called "composition code analysis". Occasionally it is called "composite" analysis, which is not quite correct. One domestic SCA vendor also uses the term "component analysis", which, in my view, reflects the essence most accurately.

So what is the essence? First of all, SCA is a process, just like asset management or incident investigation. It is not a one-time action but a series of recurring checks aimed at raising the security level of a product or application.

During this process, the product is broken down into its components to identify their dependencies, licenses, and vulnerabilities, and to check whether those licenses are compatible with one another (for example, if you have pulled in a GPL-licensed dependency, you may now be obliged to open the source code of your product). Put simply, regular checks highlight weak points both in your own source code and in the open-source code pulled into the project. As a result, the owner learns which components are covered by checks and gets the artifacts needed to decide how to handle the identified risks.
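To make the license part concrete, here is a minimal sketch of a license screen. It assumes each component record already carries an SPDX license identifier; the two license sets are a hypothetical company policy, not a statement of what GPL actually requires in your situation, and `some-gpl-lib` and `mystery-lib` are made-up names.

```python
# Hypothetical policy: licenses that trigger a review for a proprietary product.
COPYLEFT = {"GPL-2.0-only", "GPL-3.0-only", "AGPL-3.0-only"}
NON_COMMERCIAL = {"CC-BY-NC-4.0"}

def license_findings(components):
    """Return (name, license, reason) for every component that needs review."""
    findings = []
    for comp in components:
        lic = comp.get("license", "UNKNOWN")   # missing license info is itself a finding
        if lic in COPYLEFT:
            findings.append((comp["name"], lic, "copyleft: may require opening source"))
        elif lic in NON_COMMERCIAL:
            findings.append((comp["name"], lic, "non-commercial use only"))
        elif lic == "UNKNOWN":
            findings.append((comp["name"], lic, "license not identified"))
    return findings

components = [
    {"name": "requests", "license": "Apache-2.0"},
    {"name": "some-gpl-lib", "license": "GPL-3.0-only"},  # hypothetical component
    {"name": "mystery-lib"},                              # hypothetical, no license data
]
for finding in license_findings(components):
    print(finding)
```

Unknown licenses are flagged on purpose: a component whose license cannot be determined is a risk in its own right.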

Who is this for and why?

There are three reasons a company needs an SCA process (provided, of course, that the company develops software):

· Licensing – sometimes you need to determine whether you can sell your own product at all if it contains, for example, a component licensed for non-commercial use only.

· Certification – the regulator sets security requirements for source code; until compliance with them is verified, your software cannot be trusted "at face value".

· Security – even if you do not sell your product and do not want a certificate, it will be, to put it mildly, unpleasant if your brainchild gets hacked or your application becomes the reason your users get hacked.

The application can be anything: mobile apps, serious information security products, or even games. Everything developed by you, by the customer, or even your pet project needs to be checked, and findings need a timely response.

SCA as a process

Source code and binary builds

Let's start with what SCA actually works on. Here everything is simple: source code and binaries. Whatever is written by a person (or with the help of Copilot) is checked for vulnerable dependencies.

In general, the output can be the dependency graph itself, which shows what share of the product is closed and open code and how many weak points the future build will contain. Some specialists start straight from the attack surface: the ways a researcher or attacker could compromise the product and cause harm. To define a strategy and the first steps, you need to understand how to prioritize.

How to choose what to check?

Opinions differ here, and neither the SDL community nor public talks give an entirely clear picture. Rather, there is a generally accepted template of actions plus a number of factors that can shift the strategy. But if you are implementing the process for the first time, it is better to start with the obvious and simple.

Source code dependencies may include open-source libraries and proprietary ones. The latter are harder to check: instead of examining the code directly, you have to rely on the vendor or on vulnerability knowledge bases. You essentially buy a "pig in a poke", with no way to confirm that the known vulnerabilities are all that awaits you in the vendor's closed code.

That is one reason open-source components often get priority. The second is that attackers know them too: anyone who wants to hack your product will start with the known vulnerabilities of open libraries.

Container builds are most often cited as the second stage of verification.

Once these steps are complete and the process is established, you can afford to spend time checking the IDE used for development and the OS the product will be installed on. Your product may be well built and secure, yet your game or system can still be disrupted if the OS itself is compromised.

Dependencies. Important nuances

Once the scope is defined, dependency information gathering begins: the source code is searched in various ways for all imports and additional libraries.

What are these ways? From the least automated to the most automated:

· fully manual work: trying to run the product on a "bare" system and hunting down the missing dependency packages by hand;

· manual search and checking against knowledge bases;

· open-source or commercial SCA tools.

Where can dependencies be searched?

· directly in the source code, for example, the "requirements.txt" file for Python;

· via regular expressions matching keywords such as import, scope, etc.;

· in project repositories, using the methods above;

· builds of finished products;

· containers and virtual machines with the finished product.
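The first two sources above can be sketched in a few lines of Python: reading declared dependencies from `requirements.txt` and grepping source files for `import` statements. This is a simplified heuristic under stated assumptions, not how any particular SCA tool works: it ignores nested `-r` files, multi-module `import a, b` lines, and the fact that import names often differ from package names (for example, `yaml` is provided by the PyYAML package). Standard-library modules such as `urllib` also show up and would need filtering.

```python
import re

# name, optional [extras], then whatever version specifier follows
REQ_LINE = re.compile(r"^([A-Za-z0-9][A-Za-z0-9._-]*)(\[[^\]]*\])?\s*(.*)$")
# 'import x.y' or 'from x.y import z' at the start of a line
IMPORT_LINE = re.compile(r"^\s*(?:from\s+([\w.]+)\s+import|import\s+([\w.]+))", re.MULTILINE)

def parse_requirements(text):
    """Return (name, version_spec) pairs declared in requirements.txt content."""
    deps = []
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()          # drop comments
        if not line or line.startswith("-"):         # skip blanks and pip options like -r
            continue
        line = line.split(";", 1)[0].strip()         # drop environment markers
        m = REQ_LINE.match(line)
        if m:
            deps.append((m.group(1).lower(), m.group(3).strip()))
    return deps

def find_imports(source):
    """Return top-level module names imported by Python source (regex heuristic)."""
    modules = set()
    for m in IMPORT_LINE.finditer(source):
        name = m.group(1) or m.group(2)
        if not name.startswith("."):                 # skip relative imports
            modules.add(name.split(".")[0])
    return sorted(modules)

requirements = "requests==2.31.0\n# web stack\nflask>=2.0  # loose spec\n-r dev.txt\n"
source = "import requests\nfrom urllib.parse import urlparse\nfrom . import helpers\n"
print(parse_requirements(requirements))  # [('requests', '==2.31.0'), ('flask', '>=2.0')]
print(find_imports(source))              # ['requests', 'urllib']
```

Comparing the two lists is already useful: an import with no declared package, or a declared package nobody imports, is worth a closer look.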

The main questions are how to capture all the dependencies and how to avoid undoing your own work. A useful tip from experts is to always pin the versions of libraries and their dependencies: SCA results lose their relevance if you do not control updates and do not know which library versions the product uses at a given point in time.
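As a rough illustration of the pinning advice, the sketch below flags `requirements.txt` entries that do not fix an exact version with `==`. It is an assumption-laden simplification: it only looks at what you declared, while transitive dependencies also need locking, for example through `pip freeze` output or a lock file produced by a tool such as pip-tools or Poetry.

```python
import re

# name, optional [extras], '==', then a concrete version without wildcards
PINNED = re.compile(r"^[A-Za-z0-9][A-Za-z0-9._-]*(\[[^\]]*\])?==[^=*]+$")

def unpinned(requirements_text):
    """Return requirement lines that do not pin an exact version with '=='."""
    problems = []
    for raw in requirements_text.splitlines():
        line = raw.split("#", 1)[0].strip()               # drop comments
        if not line or line.startswith("-"):              # skip blanks and pip options
            continue
        spec = line.split(";", 1)[0].replace(" ", "")     # drop environment markers
        if not PINNED.match(spec):
            problems.append(line)
    return problems

reqs = """\
requests==2.31.0
flask>=2.0
numpy
urllib3==2.*
"""
print(unpinned(reqs))  # ['flask>=2.0', 'numpy', 'urllib3==2.*']
```

A check like this can run in CI so that a loose specifier never silently changes what the SCA report describes.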

Note that this process closely resembles the vulnerability discovery and management that security professionals already know; the only difference is that here the objects are dependencies and libraries rather than installed software. The similarity continues later, when we move on to mitigation and fixes.

Just as a vulnerability scanner inspects files and their contents for the names and versions of software on a server or workstation, an SCA tool inspects code and looks for the names and versions of libraries. Where does this information go next?
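The scanner analogy boils down to matching an inventory of (name, version) pairs against known affected version ranges. The sketch below assumes a tiny hypothetical advisory list and plain numeric versions; real tools pull advisories from databases such as OSV or NVD and use proper version semantics (for Python, PEP 440), which this simplified `vtuple` does not implement.

```python
def vtuple(version):
    """'2.31.0' -> (2, 31, 0); simplified, numeric dot-separated versions only."""
    return tuple(int(part) for part in version.split("."))

# Hypothetical advisories: affected from `introduced` (inclusive) up to `fixed` (exclusive).
ADVISORIES = [
    {"id": "EXAMPLE-0001", "package": "libfoo", "introduced": "1.0.0", "fixed": "1.4.2"},
    {"id": "EXAMPLE-0002", "package": "libbar", "introduced": "2.0.0", "fixed": "2.0.5"},
]

def match_advisories(inventory, advisories=ADVISORIES):
    """Return (package, version, advisory_id) for each inventory entry in an affected range."""
    hits = []
    for name, version in sorted(inventory.items()):
        v = vtuple(version)
        for adv in advisories:
            if adv["package"] == name and vtuple(adv["introduced"]) <= v < vtuple(adv["fixed"]):
                hits.append((name, version, adv["id"]))
    return hits

inventory = {"libfoo": "1.3.9", "libbar": "2.1.0", "libbaz": "0.9"}
print(match_advisories(inventory))  # [('libfoo', '1.3.9', 'EXAMPLE-0001')]
```

Here `libbar` 2.1.0 is not reported because it is already past the fixed version, exactly as a server that has been patched drops out of a vulnerability scan.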

SBOM and other BOMs

Meet the SBOM (Software Bill of Materials): a machine-readable inventory of the libraries in a product and their versions. Such a document can be handed to researchers, who will either look up known vulnerabilities for these components or test a specific version manually.

The SBOM also records the dependency relationships, so when a vulnerability is found in one of the components, you can go back one step and see exactly how that component gets into the product.
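For a feel of what such a document contains, the sketch below hand-builds a small SBOM in the shape of CycloneDX JSON (CycloneDX and SPDX are the two widely used SBOM formats) and then answers the "go back one step" question. It covers only a small subset of the format; in practice an SBOM is generated by tooling. The application name `my-api` is hypothetical and the versions are illustrative.

```python
import json

def purl(name, version):
    """Package URL for a PyPI package, e.g. pkg:pypi/requests@2.31.0."""
    return f"pkg:pypi/{name}@{version}"

def make_sbom(app_name, app_version, components, edges):
    """Build a minimal CycloneDX-style SBOM (as a dict) for an application.

    components: {library name: version}
    edges: {name: [names it depends on]}, with app_name as the root
    """
    refs = {name: purl(name, ver) for name, ver in components.items()}
    refs[app_name] = app_name                      # the product itself gets a plain bom-ref
    return {
        "bomFormat": "CycloneDX",
        "specVersion": "1.5",
        "version": 1,
        "metadata": {"component": {"type": "application", "bom-ref": app_name,
                                   "name": app_name, "version": app_version}},
        "components": [
            {"type": "library", "bom-ref": refs[n], "name": n, "version": v, "purl": refs[n]}
            for n, v in sorted(components.items())
        ],
        "dependencies": [
            {"ref": refs[n], "dependsOn": [refs[d] for d in deps]}
            for n, deps in edges.items()
        ],
    }

def pulled_in_by(sbom, ref):
    """Go one step back: which entries declare a dependency on `ref`?"""
    return [d["ref"] for d in sbom["dependencies"] if ref in d["dependsOn"]]

sbom = make_sbom(
    "my-api", "1.0.0",
    {"requests": "2.31.0", "urllib3": "2.0.7", "idna": "3.4"},
    {"my-api": ["requests"], "requests": ["urllib3", "idna"]},
)
document = json.dumps(sbom, indent=2)                # the text handed to researchers
print(pulled_in_by(sbom, "pkg:pypi/urllib3@2.0.7"))  # ['pkg:pypi/requests@2.31.0']
```

Repeating `pulled_in_by` until you reach the application gives the full path by which a vulnerable component enters the product.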

With such a document, you can outline the presumed attack surface and see where the weak points of the final product are.

There is also MLBOM for artificial intelligence models, but that is just for general knowledge.

What else do we collect?

Beyond what we can learn about open code from other researchers' analysis results, we can test both open and closed code ourselves for the same purpose. The results of static and dynamic code analysis often go into a shared vulnerability database for the specific product for further processing.

Once the dependencies are identified and vulnerabilities detected, the findings are sent to systems that automate the SCA process. Such systems let you configure risk tracking policies: freezing updates and development, updating components directly, and notifying those responsible for the process and for patching.
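The policies mentioned above can be pictured as a simple rule table that maps finding severity to an action. The sketch assumes a hypothetical four-level severity scale, hypothetical thresholds and placeholder recipients; real SCA platforms have their own policy languages, and this is only an illustration of the idea.

```python
# Hypothetical severity scale, in increasing order of urgency.
SEVERITY_ORDER = {"low": 0, "medium": 1, "high": 2, "critical": 3}

POLICY = {
    "freeze_at": "critical",                       # block the release and further development
    "update_at": "high",                           # require a component update
    "notify": ["appsec-team", "component-owner"],  # placeholder recipients
}

def evaluate(findings, policy=POLICY):
    """Map each finding to an action and decide whether the release may proceed."""
    freeze = SEVERITY_ORDER[policy["freeze_at"]]
    update = SEVERITY_ORDER[policy["update_at"]]
    actions = []
    for f in findings:
        level = SEVERITY_ORDER[f["severity"]]
        if level >= freeze:
            actions.append(("freeze", f["component"]))
        elif level >= update:
            actions.append(("update", f["component"]))
        else:
            actions.append(("track", f["component"]))   # keep watching, no action yet
    needs_attention = any(action != "track" for action, _ in actions)
    return {
        "release_allowed": all(action != "freeze" for action, _ in actions),
        "actions": actions,
        "notify": policy["notify"] if needs_attention else [],
    }

findings = [
    {"component": "libfoo", "severity": "critical"},
    {"component": "libbar", "severity": "high"},
    {"component": "libbaz", "severity": "low"},
]
result = evaluate(findings)
print(result["release_allowed"])  # False
print(result["actions"])
```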

We found vulnerabilities. What's next?

Here the analogy with vulnerability management holds again: just as in your usual work, you need to assess the risks and decide what to do next.

Whose responsibility is this?

Most often, such problems are solved by the developers themselves with the participation of colleagues from AppSec, who will help choose the right strategy.

As with choosing the scope of checks, opinions differ on how to fix the vulnerabilities found, how to prioritize them, and how to conduct the initial triage. What the approaches have in common is that experts base them on the types of dependencies found in the product.

Types of dependencies

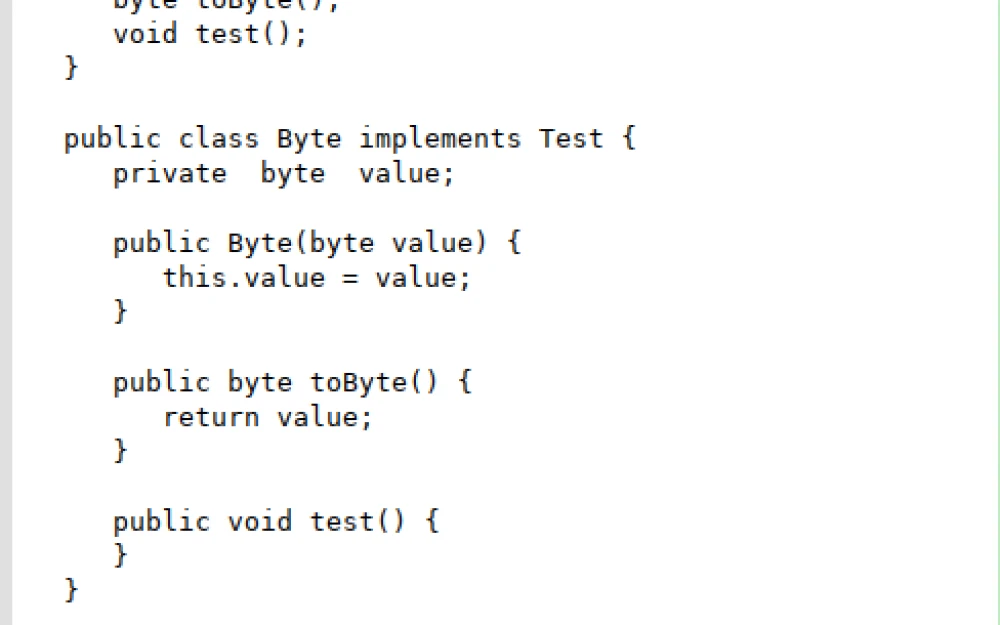

Dependencies can be direct or indirect (indirect ones are also called transitive). What does this mean?

For example, you have Python code that makes API requests, and you decide to use the requests library. This is a direct dependency. It, in turn, pulls in the urllib3 library as one of its own dependencies, and from your code's point of view that is a transitive dependency.
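The direct/transitive split falls out of a breadth-first walk over the dependency graph starting from the product. The sketch below uses a graph that roughly mirrors what requests declares; `my-api` is a hypothetical application name. One design choice to note: BFS records the shortest path, so a package that is both a direct and a transitive dependency is classified as direct.

```python
from collections import deque

def classify(root, graph):
    """BFS from the product; returns (direct, transitive, paths).

    paths maps each reachable package to the shortest chain root -> ... -> package.
    """
    paths = {root: [root]}
    queue = deque([root])
    while queue:
        node = queue.popleft()
        for dep in graph.get(node, []):
            if dep not in paths:                 # first visit = shortest path
                paths[dep] = paths[node] + [dep]
                queue.append(dep)
    del paths[root]
    direct = sorted(p for p, path in paths.items() if len(path) == 2)
    transitive = sorted(p for p, path in paths.items() if len(path) > 2)
    return direct, transitive, paths

graph = {
    "my-api": ["requests"],
    "requests": ["urllib3", "idna", "certifi", "charset-normalizer"],
}
direct, transitive, paths = classify("my-api", graph)
print(direct)            # ['requests']
print(transitive)        # ['certifi', 'charset-normalizer', 'idna', 'urllib3']
print(paths["urllib3"])  # ['my-api', 'requests', 'urllib3']
```

Even in this tiny example, one direct dependency brings in four transitive ones, which is the statistical effect discussed next.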

Vulnerabilities are more likely to be found in transitive dependencies. This may be because larger projects receive more frequent contributions and are checked more often, so more of their vulnerabilities are found and fixed, while the smaller libraries they pull in get less attention. Or it may simply be statistics: each direct dependency brings in several transitive ones, so the probability of finding a vulnerability is higher.

Transitive dependencies also end up on the attack surface more often, so some experts recommend starting with them.

The opposite view is that you should start with direct dependencies, since the developer has more influence over how they are fixed.

Interception Plan

What can the developer do? The first step is to confirm that the vulnerability is relevant to the product, that is, that someone really could carry out such an attack and break things. That applies to direct dependencies.

For transitive dependencies, things are more complicated: we need to build a track, a chain of calls through our application. This is very similar to an attack kill chain or to the intruder's route reconstructed during an incident investigation. Sometimes the tracks confirm only some of the indirect dependencies, which significantly reduces the amount of remediation work. However, fixing transitive dependencies from your own code can be difficult. For example, you file an issue and the vulnerability gets patched, but the direct dependency you use is not updated and keeps working with the old vulnerable version, so the fix cannot be pushed through the whole chain. In this case, experts suggest a different path: work with the calls to vulnerable methods in your own code, validating each call and putting a secure wrapper around it to protect the data.
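Building a track can be modelled as a reachability search over a call graph: is there a chain of calls from any entry point of the application to the vulnerable function? The sketch assumes the call graph is already available as a dictionary (in practice it comes from static analysis), and every function name in it, including the `thirdparty.*` ones, is hypothetical.

```python
from collections import deque

def find_track(call_graph, entry_points, vulnerable):
    """Return a shortest call chain from any entry point to `vulnerable`, or None."""
    parent = {e: None for e in entry_points}
    queue = deque(entry_points)
    while queue:
        fn = queue.popleft()
        if fn == vulnerable:
            chain = []
            while fn is not None:                # walk back to the entry point
                chain.append(fn)
                fn = parent[fn]
            return chain[::-1]
        for callee in call_graph.get(fn, []):
            if callee not in parent:
                parent[callee] = fn
                queue.append(callee)
    return None

# Hypothetical call graph: function -> functions it calls.
call_graph = {
    "api.upload": ["storage.save", "thirdparty.parse_xml"],
    "api.health": ["db.ping"],
    "thirdparty.parse_xml": ["thirdparty.expand_entities"],
    "report.export": ["thirdparty.load_unsafe"],   # never called from an entry point
}
entry_points = ["api.upload", "api.health"]
print(find_track(call_graph, entry_points, "thirdparty.expand_entities"))
# ['api.upload', 'thirdparty.parse_xml', 'thirdparty.expand_entities']
print(find_track(call_graph, entry_points, "thirdparty.load_unsafe"))  # None
```

A `None` result is what "the tracks confirm only part of the dependencies" looks like: the vulnerable code is present but unreachable, so it can be deprioritized, while a returned chain shows exactly where a validating wrapper could sit.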

There are several popular strategies for dealing with found vulnerabilities:

· Update – test the next version of the application or library, then update. Update policies vary widely: some teams prefer to stay "a few versions" behind the latest release, because the latest version often seems safer only because nothing critical has been found in it yet. Others are motivated by application continuity: a new version may add functionality but may also remove something the product relies on. Unfortunately, "just update everything" is not a panacea.

· Migration – sometimes you pull a library with many dependencies into the product just for a single function. Then you need to weigh the risk of leaving the application vulnerable against the time and resources needed to write your own implementation or replace the library with another one.

· Mitigation – you can protect the code by other means. Many companies that cannot wait for the library author's patch, or cannot switch to a new version, patch the library themselves and carry this legacy from product to product. There are more convenient options, for example, placing the product behind an information security tool (SZI) that filters unwanted calls and protects sensitive data.

· Freeze everything – both updates and development. This strategy is most often used when the vulnerable component is core to the system. There have been cases where products were held back from the market and rollout deadlines for customers were postponed until the vulnerability was eliminated one way or another.
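The "stay a few versions behind" update policy can be expressed as a small selection rule: skip the newest `lag` releases, then take the newest remaining version that is not known to be vulnerable. The release list, the vulnerable set and the `lag` parameter are all hypothetical inputs here, and the numeric-only version parsing is a simplification.

```python
def vtuple(version):
    """'1.10.1' -> (1, 10, 1) so that 1.10 sorts after 1.9 (a plain string sort would not)."""
    return tuple(int(part) for part in version.split("."))

def pick_version(available, vulnerable, lag=1):
    """Newest non-vulnerable version, ignoring the `lag` most recent releases."""
    ordered = sorted(available, key=vtuple)
    candidates = ordered[:max(0, len(ordered) - lag)]   # stay `lag` releases behind
    safe = [v for v in candidates if v not in vulnerable]
    return safe[-1] if safe else None

available = ["1.11.0", "1.9.0", "1.10.1", "1.10.0"]
vulnerable = {"1.10.0"}
print(pick_version(available, vulnerable, lag=1))  # 1.10.1
print(pick_version(available, vulnerable, lag=0))  # 1.11.0
```

When the function returns `None`, no acceptable version exists under the policy, which is the point where migration, mitigation or a freeze comes into play.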

The most important thing

The main thing I want you to remember is that SCA is a regular process. It should evolve along with the product and steadily raise its security level. Once one step is done, move on to the next, and do not be afraid of updates or of a dependency graph that looks frighteningly large at first glance: everything is fixable.

It is important to be aware of what the application uses and what it consists of. Only through a properly implemented and structured secure development process, whose foundation is component analysis, can something truly secure be created.
