Google introduced the AI video generator Veo 2.0: is it better than Sora and how to get access

Just a few days after OpenAI released Sora to the public, Google announced Veo 2.0, its newest and most advanced AI model for video generation. The new version brings several really cool improvements over its predecessor, including 4K resolution, better camera control, and much higher overall quality.

The timing of the Veo 2.0 release has everyone wondering: is Veo 2.0 better than Sora?

If you are hearing about Veo for the first time: it is Google's AI model for generating videos from text descriptions. The first version of Veo was introduced in May 2024 but never became publicly available. Now Google has introduced Veo 2.0 with significant improvements and expanded functionality.

What's new in Veo 2.0?

Google highlights three main improvements in Veo 2.0:

Increased realism and accuracy

Enhanced motion capabilities

Broader camera control capabilities

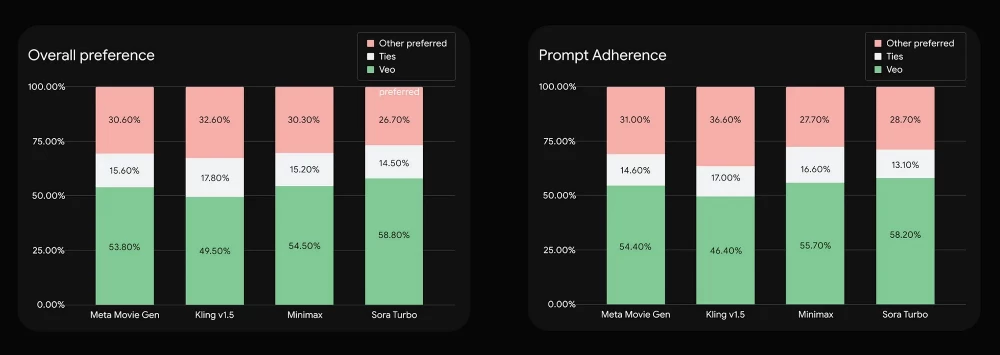

To demonstrate the capabilities of Veo 2.0, Google conducted a comparative evaluation with other leading video generation models such as Movie Gen from Meta, Kling v1.5, Minimax, and Sora Turbo.

Human raters reviewed 1,003 video samples generated from prompts in the MovieGenBench dataset. The videos were compared at 720p resolution but with different durations: Veo samples were 8 seconds long, Movie Gen samples were 10 seconds long, and the other models produced 5-second clips.

As the tables above show, Veo 2 achieves the best results both in overall preference and in how accurately it follows prompts.

Of course, given Google's not-so-great track record with product announcements, you should take these metrics with a grain of salt. It's always important to try these AI video generators yourself before drawing any conclusions.

X user Blaine Brown ran a good experiment in which he asked several video models to generate a video of a chef's hands slicing a steak. This is a very difficult task for AI models: it involves hands, the sequential physics of slicing and movement, interpreting "perfectly cooked," steam, juices, and so on.

Here is the prompt and the final results:

Prompt: A pair of hands skillfully slicing a perfectly cooked steak on a wooden cutting board. faint steam rising from it.

These results make it clear that only Veo 2.0 managed to produce a realistic video of the steak being sliced.

Key Features of Veo 2.0

Let's take a closer look at the new features, starting with increased realism and accuracy.

According to Google, Veo 2.0 is a huge step forward in detail, realism, and artifact reduction. Compared to its predecessor, the model generates videos with more precise textures, more natural movement, and a cinematic look.

Prompt: An extreme close-up shot focuses on the face of a female DJ, her beautiful, voluminous black curly hair framing her features as she becomes completely absorbed in the music. Her eyes are closed, lost in the rhythm, and a slight smile plays on her lips. The camera captures the subtle movements of her head as she nods and sways to the beat, her body instinctively responding to the music pulsating through her headphones and out into the crowd. The shallow depth of field blurs the background. She’s surrounded by vibrant neon colors. The close-up emphasizes her captivating presence and the power of music to transport and transcend.

Honestly, I am amazed at the quality of this video. At first glance, you would never guess that this video was generated by artificial intelligence. The skin texture is detailed, the head movements are smooth, and even the barely noticeable camera shake adds realism to the scene.

Realism is also evident in the textures and materials. Take, for example, the video with a transparent stone created by Veo 2.0.

The model accurately reproduces how light reflects off and refracts through the translucent surface, something many video models still cannot achieve.

Now let's look at Veo 2's improved motion capabilities. According to Google's blog, the new model outperforms its competitors in understanding physics and following detailed instructions.

Take a look at this example video where a person is slicing a tomato.

Prompt: A pair of hands skillfully slicing a ripe tomato on a wooden cutting board

The resulting video looks natural: the knife cuts smoothly through the tomato. The physics of the motion, including how the tomato shifts slightly as it is cut and how the knife moves, is conveyed with amazing accuracy.

This is how the same prompt is interpreted by Sora from OpenAI:

As you can see, Sora still struggles to represent real-world physics.

Here's another example:

Prompt: This medium shot, with a shallow depth of field, portrays a cute cartoon girl with wavy brown hair, sitting upright in a 1980s kitchen. Her hair is medium length and wavy. She has a small, slightly upturned nose, and small, rounded ears. She is very animated and excited as she talks to the camera.

3D animation is now under threat. Look at the character's hair: each strand behaves just as it would in the real world, responding naturally to the character's movements.

Finally, Veo 2 offers improved camera control: it can accurately interpret instructions for a wide range of shot styles, angles, and camera movements, as well as combinations of them.

Here's an interesting example shared by Jerrod Lew on X, in which he shows how Veo handles a prompt that asks for a more cinematic scene.

Prompt: A video of a person sitting in a cafe with a coffee. After a bit, cuts to another viewpoint to reveal that a person nearby table is writing a letter to them.

Notice how the camera cuts between shots? This is incredibly useful if you want to generate several scenes from a single prompt. According to the author's testing, this capability is not available in other AI video generators, not even in OpenAI's Sora.

For directors, marketers, and content creators, this opens up possibilities for more elaborate AI-generated storylines. Instead of stitching together individual clips, they can now get a multi-angle video from a single prompt.

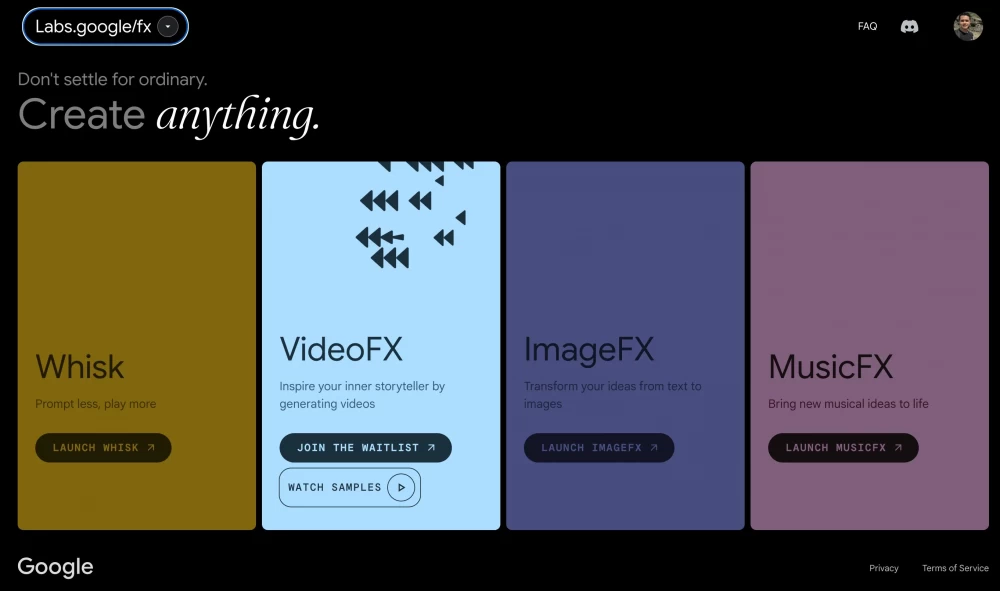

How to create videos with Veo 2.0

Go to Google Labs and select "VideoFX" from the list of available AI tools.

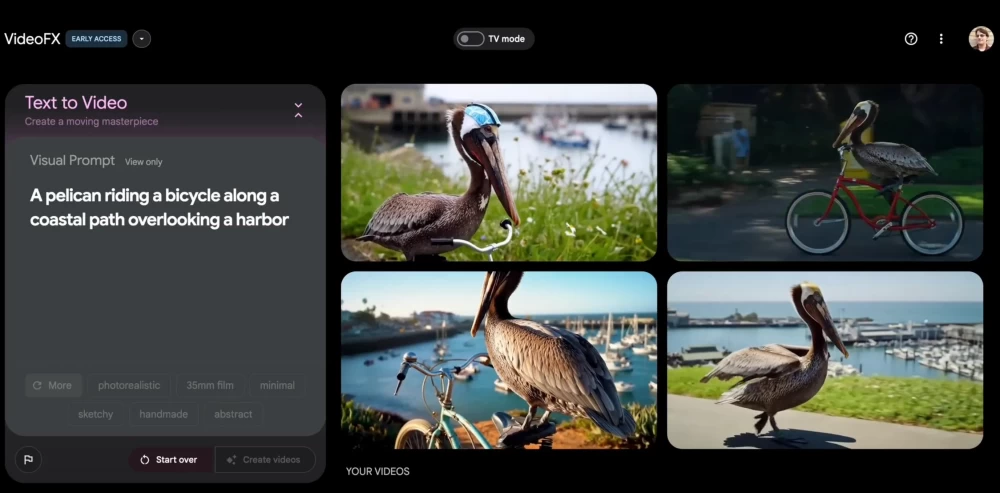

If you are one of the lucky ones who got early access to Veo 2.0 through VideoFX, a panel on the left lets you enter a description of the video you want to generate.

After you click the "Create videos" button, VideoFX generates four variations at once. You can run the generation again to get more variations or download the videos you like.

Some users have also noticed a "Text to Image to Video" feature, which lets you create an image with Imagen 3 and then turn it into a video with Veo 2.0.

What you need to know about Veo 2

Veo still "hallucinates" and sometimes creates unwanted details, such as extra fingers or unexpected objects.

Videos generated in Veo 2 contain an invisible SynthID watermark that helps identify them as AI-generated, reducing the likelihood of misinformation and misattribution.

Veo can generate videos with 4 times the resolution and more than 6 times the duration of Sora's.
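To see where these multipliers could come from, here is the arithmetic as a rough sketch. It assumes that Veo's "4K" means the 3840×2160 UHD frame and that Sora tops out at 1080p (1920×1080) and 20-second clips; these frame sizes and the 20-second limit are assumptions for illustration, not figures from Google's announcement.

```latex
% Assumed: Veo 4K UHD = 3840 x 2160, Sora max = 1920 x 1080 and 20 s
\[
\frac{3840 \times 2160}{1920 \times 1080}
  = \frac{8\,294\,400}{2\,073\,600}
  = 4,
\qquad
6 \times 20\ \text{s} = 120\ \text{s}
\]
```

Under these assumptions, "4 times the resolution" refers to pixel count (twice the width and twice the height), and "more than 6 times the duration" would mean Veo clips longer than two minutes.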

Neither Google nor DeepMind has disclosed what data was used to train Veo 2 or where it came from. Most likely, the main data source is YouTube, which Google owns.

According to Eli Collins, Vice President of Product at Google DeepMind, there is still work to be done despite the promising results.

«Veo can consistently follow a prompt for a couple of minutes, but [it can't] follow complex prompts over long periods. Similarly, character consistency can be an issue. There is also room for improvement in generating intricate details and fast, complex motion». - Eli Collins

How to get access to Veo 2.0

Google is gradually rolling Veo 2.0 out through VideoFX, YouTube, and Vertex AI. To join the waitlist, go to VideoFX and click the "Join the waitlist" button.

Once you are granted access, you will receive an email notification. Unfortunately, it is unclear how long this will take and how Google selects the users who get access to Veo 2.0.

Honestly, I thought OpenAI would crush Google with its 12-day Christmas campaign, but Sora's failed launch gave Google a great moment to grab attention with Veo 2.0. The level of realism here is simply impressive. Physics and motion consistency are much better, and the fact that it can generate 4K video up to a minute long is already a huge achievement.

I am very glad that Google released this model. For several months I have been waiting for alternatives to Kling and Runway, and this kind of competition is exactly what we need. However, neither Google nor DeepMind has announced pricing yet. I really hope they won't follow OpenAI's lead and charge $200 a month for full capabilities.

If I had a wish list for Google, it would be this: include Veo in the Gemini subscription, add more creative settings (aspect ratio, resolution, and video length), and offer a commercial license. That would be perfect.

Friends, I'd be glad if you subscribed to my Telegram channel about neural networks, where I try to share only useful information.
