- AI

- A

Results of OpenAI o1, testing and notes on the new model

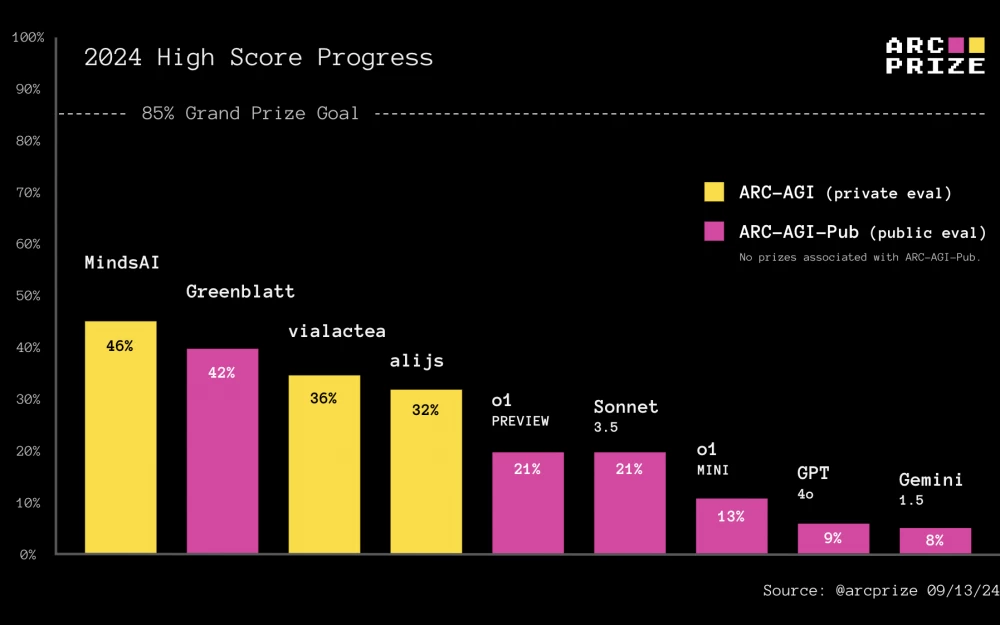

o1-previewIn the last 24 hours, we have gained access to the newly released OpenAI models, o1-mini specifically trained to emulate reasoning. These models are given additional time to generate and refine reasoning tokens before giving a final answer. Hundreds of people have asked how o1 looks on the ARC Prize. Therefore, we tested it using the same basic test system that we used to evaluate Claude 3.5 Sonnet, GPT-4o, and Gemini 1.5. Here are the results:

Is o1 a new paradigm in relation to AGI? Will it scale? What explains the huge difference between o1's performance on IOI, AIME, and many other impressive test results compared to only modest results on ARC-AGI?

We have a lot to talk about.

Chain of Thought

o1 fully implements the Chain of Thought (CoT) paradigm "let's think step by step," applying it both during training and during testing.

In practice, o1 makes significantly fewer mistakes when performing tasks whose sequence of intermediate steps is well represented in synthetic CoT training data.

OpenAI states that during training, they created a new reinforcement learning (RL) algorithm and a highly efficient data processing pipeline using CoT.

It is implied that the fundamental source of training o1 is still a fixed set of pre-training data. But OpenAI can also generate tons of synthetic CoTs that emulate human thinking for further training the model using RL. The unresolved question is how OpenAI chooses which generated CoTs to train on?

Although we have few details, the reward signals for reinforcement learning were likely achieved through verification (in formal areas such as mathematics and coding) and human labeling (in informal areas such as task breakdown and planning).

During inference, OpenAI says they use RL to allow o1 to refine its CoT and improve the strategies it uses. We can assume that the reward signal here is some actor + critic system similar to those OpenAI has published before. And that they apply search or return to the generated reasoning tokens during inference.

Test-time computations

The most important aspect of o1 is that it demonstrates a working example of applying CoT reasoning search to informal language rather than formal languages such as mathematics, coding, or Lean.

While the added scaling of training time using CoT is noteworthy, the big novelty is the scaling of test time.

We believe that iterative CoT truly unlocks greater generalization. Automatic iterative prompting allows the model to better adapt to novelty, similar to fine-tuning during testing used by the MindsAI team.

If we make only one inference, we are limited to reapplying memorized programs. But by generating intermediate CoT outputs, or programs, for each task, we open up the possibility of composing components of learned programs, achieving adaptation. This technique is one way to overcome the number one problem of large language model generalization: the ability to adapt to novelty. Although, like fine-tuning during testing, it ultimately remains limited.

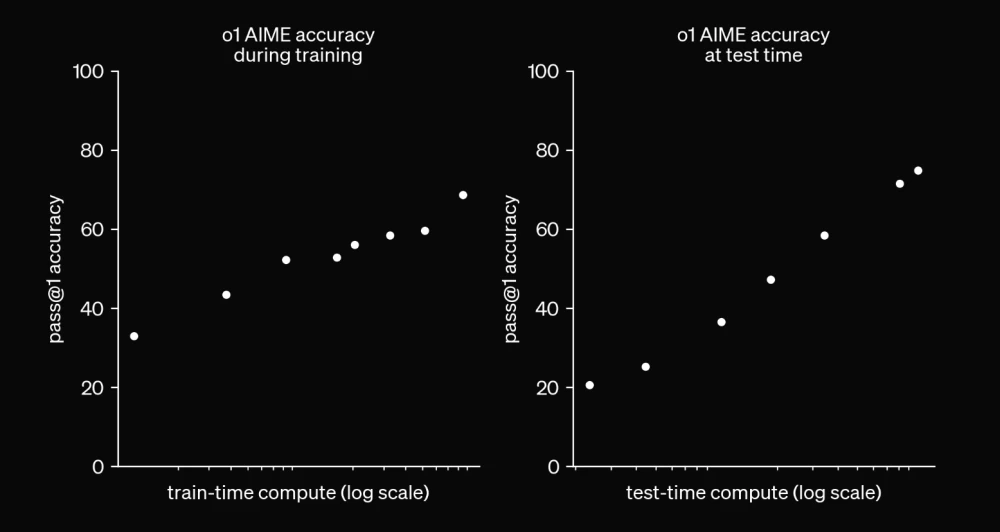

When AI systems are allowed a variable amount of computation during the test (e.g., the number of reasoning tokens or search time), there is no objective way to report a single benchmark score because it correlates with the allowed computation. This is what this chart shows.

More computation — more accuracy.

When OpenAI released o1, they could have allowed developers to specify the amount of computation or time allotted for refining CoT during testing. Instead, they "hard-coded" a point along the computation continuum during testing and hid this implementation detail from developers.

With changing computational capabilities during testing, we can no longer simply compare output between two different AI systems to assess relative intelligence. We also need to compare computational efficiency.

Although OpenAI did not share efficiency figures in its statement, it is exciting that we are entering a period where efficiency will be in the spotlight. Efficiency is critical to defining AGI, which is why the ARC Prize sets an efficiency threshold for winning solutions.

Our forecast: in the future, we will see many more test charts comparing accuracy and test execution time.

ARC-AGI-Pub model baselines

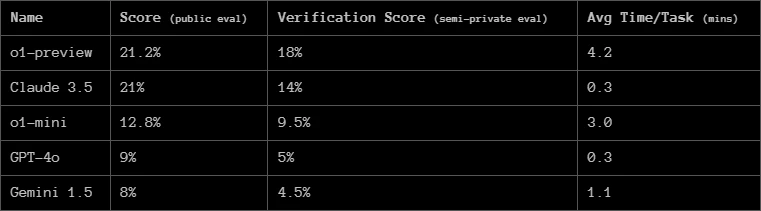

OpenAI o1-preview and o1-mini both outperform the GPT-4o public ARC-AGI evaluation dataset. o1-preview In terms of accuracy, it is roughly equivalent to Claude 3.5 Sonnet from Anthropic, but it takes about 10 times longer to achieve results similar to Sonnet.

To get model baseline scores on the ARC-AGI-Pub leaderboard, we use the same baseline query we used to test GPT-4o. When we test and report results on clean models like o1, we intend to get a baseline model performance measurement as much as possible without imposing any optimization.

In the future, others may find more efficient ways to create CoT-style models, and we will be happy to add them to the leaderboard if they are verified.

The increase in o1 performance was accompanied by time costs. It took 70 hours for 400 public tasks compared to 30 minutes for GPT-4o and Claude 3.5 Sonnet.

You can use our open-source Kaggle notebook as a basic test harness or starting point for your own approach. Submitting SOTA to the public leaderboard is the result of smart methods in addition to advanced models.

You might be able to figure out how to use o1 as a foundational component to achieve a higher score in a similar way!

Is there AGI here?

This diagram shows OpenAI's logarithmic linear relationship between accuracy and computation testing time on AIME. In other words, with exponential growth in computation, accuracy grows linearly.

Many are asking a new question: how scalable can this be?

The only conceptual limitation of the approach is the solvability of the problem posed to the AI. As long as the search process has an external verifier that contains the answer, you will see that accuracy increases logarithmically with computation.

In fact, the presented results are extremely similar to one of the best ARC Prize approaches by Ryan Greenblatt. He achieved a score of 43% by generating GPT-4o k=2048 solution programs for each task and deterministically verifying them using task demonstrations.

He then evaluated how accuracy changes with different values of k.

Ryan discovered an identical logarithmic-linear relationship between accuracy and computation testing time on ARC-AGI.

Does all this mean that AGI is already here if we just scale up computations during testing? Not quite.

You can see similar exponential scaling curves by looking at any brute force search, which is O(x^n). In fact, we know that at least 50% of ARC-AGI can be solved by brute force and zero AI.

To surpass ARC-AGI in this way, you would need to generate more than 100 million solution programs for each task. Practicality alone excludes O(x^n) search for scalable AI systems.

Moreover, we know that humans do not handle ARC tasks this way. Humans do not generate thousands of potential solutions; instead, we use the perception network in our brain to "see" a few potential solutions and deterministically test them using System 2 style thinking.

We can get smarter.

New ideas are needed

Intelligence can be measured by looking at how well a system transforms information into action in the situation space. This is the conversion rate, and therefore it is approaching the limit. Once you have perfect intelligence, the only way to progress is to gather new information.

There are several ways in which a less intelligent system can appear more intelligent without actually being more intelligent.

One way is a system that simply remembers the best action. Such a system would be very fragile, appearing intelligent in one area but easily falling in another.

Another way is trial and error. The system may seem intelligent if it eventually gives the correct answer, but not if it takes 100 attempts to do so.

It is to be expected that future research on computations during testing will explore how to more effectively scale search and refinement, possibly using deep learning to manage the search process.

However, we do not believe that this alone explains the large gap between o1 results on ARC-AGI and other objectively difficult tests such as IOI or AIME.

A more convincing way to explain this is that o1 still operates mainly within the distribution of its pre-training data, but now includes all newly created synthetic CoTs.

Additional synthetic CoT data enhances the focus on the CoT distribution, not just the answer distribution (more computation is spent on how to get the answer, not what the answer is). We expect that systems like o1 will perform better on tests involving the reuse of known emulated reasoning patterns (programs), but will still struggle with problems requiring the synthesis of entirely new reasoning on the fly.

Test refinement on CoT can only fix reasoning errors for now. This also explains why o1 is so impressive in certain areas. Test refinement on CoT gets an additional boost when the base model is pre-trained in a similar way.

None of the approaches alone will give you a big leap.

In summary, it can be said that o1 represents a paradigm shift from "remembering answers" to "remembering reasoning", but it is not a departure from the broader paradigm of curve fitting to distribution in order to improve performance by placing everything within the distribution.

Do you have any ideas on how to advance these new ideas further? What about CoT with multimodality, CoT with code generation, or combining program search with CoT?

Reminder that everyone can follow the news in the world of AI on my channel

Write comment