"This is the robot we've all been waiting for – like C3PO": why don't we have humanoid robots in our homes yet?

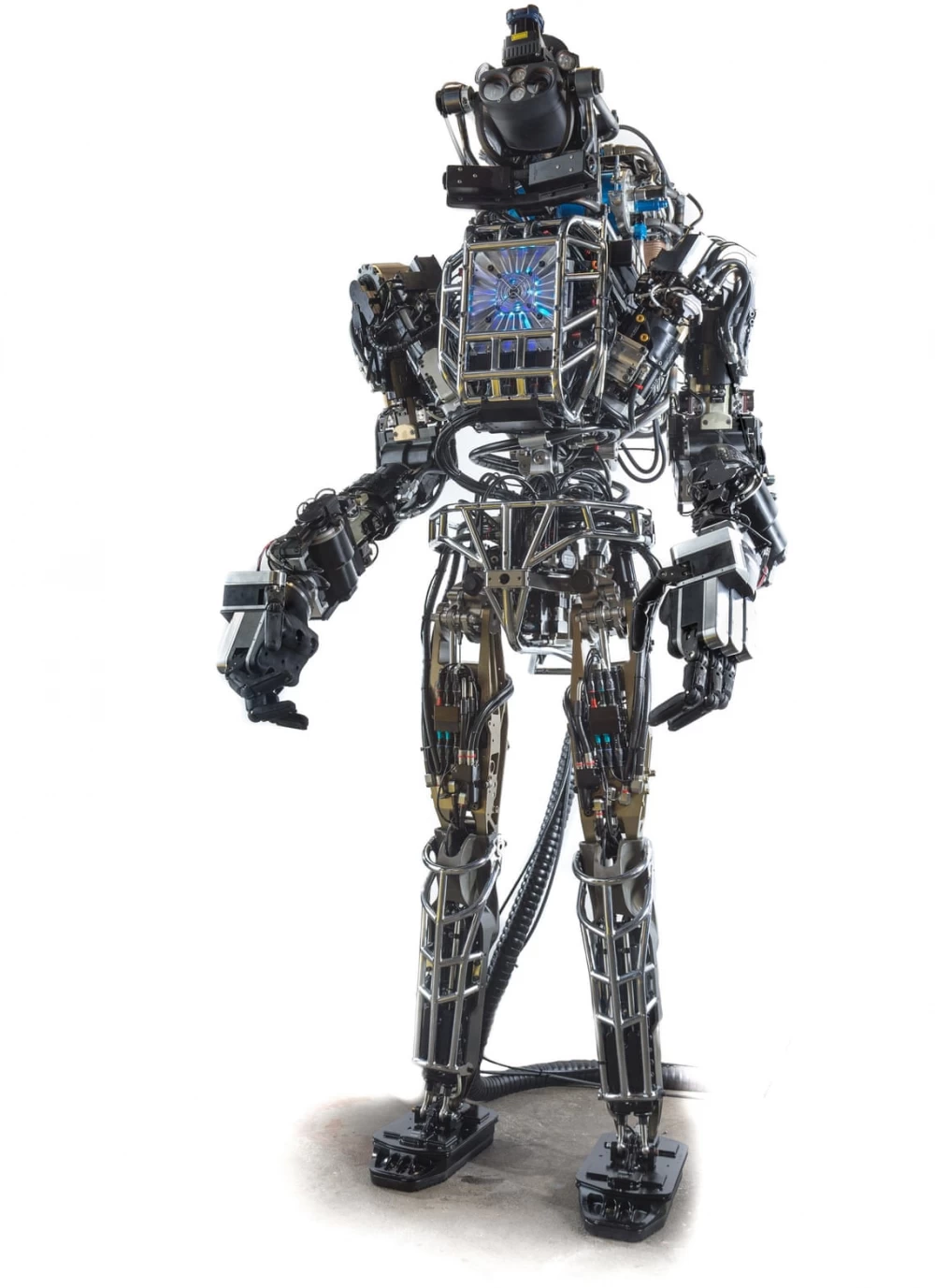

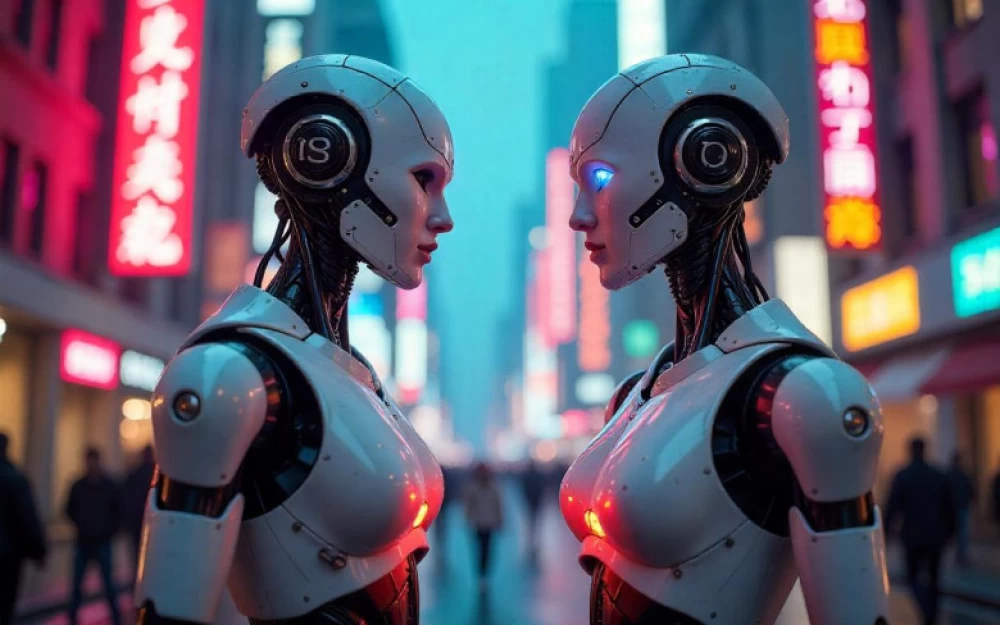

In 2013, the American robotics company Boston Dynamics introduced its new robot, Atlas. Unveiled at the Darpa Robotics Challenge, the 188cm-tall humanoid could walk over uneven ground, jump off boxes and even climb stairs. It was reminiscent of what we so often see in science fiction: a robot built to work like us and capable of all sorts of everyday tasks. It felt like the dawn of a new era, in which robots would take over our dull, heavy housework and even help care for the elderly.

Tesla and other companies are racing to give robots artificial intelligence, but development faces technical and safety hurdles. Still, the dream of a multipurpose home droid lives on.

Since then, we have seen a leap forward in artificial intelligence (AI), from computer vision to machine learning. The recent wave of large language models and generative AI systems has opened up new possibilities for human-computer interaction. But outside research labs, physical robots remain mostly confined to factories and warehouses, performing narrowly defined tasks, often behind a protective cage. Home robots are limited to vacuum cleaners and lawnmowers: not quite Rosie the robot.

“Robot bodies haven’t really advanced since the 1950s,” says Jenny Reed, director of the robotics program at the Advanced Research and Invention Agency (Aria), a government research and development body set up in the UK last year. “I’m not saying there have been no achievements, but when you look at what has happened in computing and software, it’s striking how little has changed here.”

Building robots is simply more resource-intensive, says Nathan Lepora, professor of robotics and AI at the University of Bristol. A talented person with a computer can write an algorithm, but building a robot requires access to physical hardware. “It’s much slower and much more complicated,” he says. “That’s why robotics lags behind AI.”

Research labs and companies hope to close this gap: a whole range of new humanoid robots is under development, and some are already reaching the market. In April, Boston Dynamics retired its original hydraulic Atlas and introduced a new, electric version. It plans to begin testing the robot in Hyundai factories next year and to bring it to market within the next few years. Oregon-based Agility Robotics claims that its Digit robot is the first humanoid to be paid to move boxes in a logistics center. Elon Musk claims that Tesla’s humanoid robot, known as Optimus or Tesla Bot, will start working in the company’s car factories next year.

But robots that can work outside tightly controlled environments are still a long way off. Advances in AI can achieve only so much with current hardware, says Reed, and for many tasks the robot’s physical capabilities are crucial. Generative AI systems can write poems or create paintings, but they cannot do the dirty and dangerous work that we most want to automate. For that, they need more than a brain in a box.

Building a useful robot often starts with the hands. "Many use cases for robots depend on the ability to handle objects precisely and dexterously without damaging them," says Reed. Humans are very good at this. We can instinctively switch from lifting a dumbbell to handling an eggshell, or from chopping carrots to stirring a sauce. We also have an excellent sense of touch, as our ability to read Braille shows. Robots, by comparison, struggle. Reed's Aria program, which has £57m in funding, aims to tackle this problem.

According to Rich Walker, director of the London-based company Shadow Robot, one of the problems with robot dexterity is scale. At the company's office in Camden, he demonstrates the Shadow Dexterous Hand. It is the size of a human hand, with four fingers and a thumb and joints that mimic knuckles. But while the fingers look delicate, the hand is mounted on a robotic arm that is much bulkier than a human forearm. The arm is packed with the electronics, cables, actuators and everything else needed to control the hand. "It's a packaging problem," says Walker.

The advantage of a human-scale hand is that it is the right size and shape to use human tools. Walker gives the example of a laboratory pipette, which he has modified with Sugru, a mouldable adhesive, to make it more ergonomic. You could attach a pipette tool directly to the robot instead, but then it could only use the pipette, not, say, scissors or a screwdriver.

But a fully humanoid hand is not suited to every task. Shadow Robot's latest hand, DEX-EE, looks distinctly alien. It has three digits, more like thumbs than fingers, which are noticeably larger than a human's and covered in tactile sensors. The company developed it with Google DeepMind, Alphabet's AI research lab. DeepMind wanted a robotic hand that could learn to grasp objects through repeated attempts, a trial-and-error approach known as reinforcement learning. That posed a problem: robot hands are usually designed specifically to avoid bumping into things, and they can break when collisions happen. Murilo Martins, a research engineer at DeepMind, says that when he ran experiments with the original Dexterous Hand, "every half hour I tore a tendon."

With DEX-EE, durability comes first. A video shows its three fingers continuing to flex and extend while being struck with a hammer. Its larger size leaves room for larger pulleys, which put less strain on the wire tendons, so the hand can run reliably for at least 300 hours.

Even so, says Maria Bauza, a research scientist at DeepMind, time on the real robot is precious. Last week, DeepMind published a study describing a new training method it calls DemoStart. It uses the same trial-and-error approach, but starts in a simulator rather than on a real robot hand. After training the simulated hand on tasks such as screwing nuts onto bolts, the researchers transferred the learned behavior to the real DEX-EE hand. "The hands are still going through thousands and thousands of experiments," says Bauza. "We just don't make them start from scratch."

This reduces the time and cost of experiments and makes it easier to train robots that can adapt to different tasks. However, skills don't always transfer perfectly. In simulation, DeepMind's robot hand inserted a plug into a socket 99.6% of the time, but the real hand managed it only 64% of the time.

This work shows how advances in AI and in robot bodies go hand in hand. Only through physical interaction can robots truly understand their environment. After all, Reed notes, the large language models behind text generators such as ChatGPT were trained on vast amounts of human language scraped from the internet, "but where do you get data on what it's like to pick strawberries or make a sandwich?"

As the DeepMind robotics team writes: "A large language model can tell you how to tighten a bolt or tie shoelaces, but even if it is embodied in a robot, it cannot perform these tasks itself."

Martins goes further. He believes robotics is crucial to achieving artificial general intelligence (AGI), the human-level, general-purpose intelligence that many AI researchers dream of. In his view, AI will truly understand our world only once it has a physical form. "For me, AGI does not exist without embodiment, just as human intelligence does not exist without our own body," he says.

Hands, although important, are just one part of the body. While Shadow Robot and other companies focus on fingers, an increasing number of companies and laboratories are developing full-fledged humanoids.

The appeal of humanoids may be partly psychological. "This is the robot we've all been waiting for, like C3PO," says Walker. But there is also a practical logic to taking the human form as a template. "We have designed all our environments around humans," says Jonathan Hurst, co-founder and chief robot officer of Agility Robotics. "So the human form factor is a very good way to move around, manipulate things and coexist with people."

But a humanoid may not be the best choice for every job. A wheeled robot can go anywhere a wheelchair user can go, and on rough terrain four legs can be better than two. Boston Dynamics' dog-like Spot can cross uneven ground and climb stairs, and it can get back on its feet after a fall, something bipedal robots struggle with. "Just because a humanoid robot is shaped like a human doesn't mean it has to move exactly like one or be constrained by the limits of our joints," a Boston Dynamics spokesperson adds by email.

Humanoids are still finding their feet. According to Lepora, flashy videos and sleek designs can give people an unrealistic impression of how capable or reliable these robots are. Boston Dynamics' videos are impressive, but the company is also known for publishing footage of its robots failing. In January, Musk posted a video of Optimus folding a shirt, but keen-eyed viewers spotted signs that the robot was being remotely controlled.

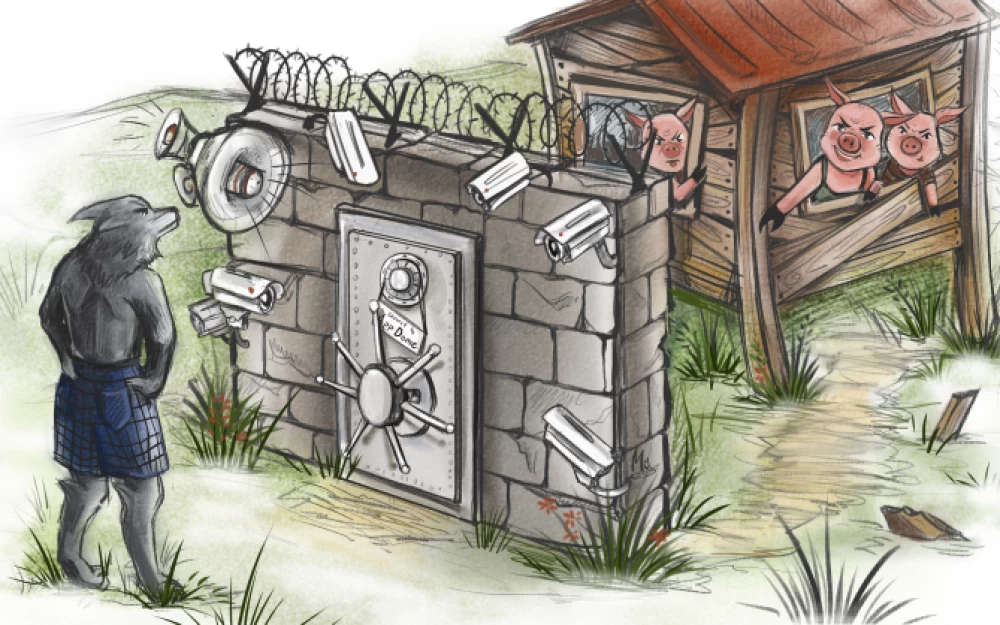

The main obstacle to moving robots out of labs and industrial sites into homes and public spaces is safety. In June, the Institute of Electrical and Electronics Engineers (IEEE) set up a study group to look at standards specifically for humanoid robots. Aaron Prather, who chairs the group, explains that a humanoid in a shared space is a very different matter from an industrial robot enclosed in a protective cage. "It's one thing when they're interacting with co-workers at Amazon or in a Ford factory, because there's a trained worker working with the robot," he says. "But if I take this robot to a public park, how will it interact with children? How will it interact with people who don't understand what's going on?"

As a next step, Hurst expects robots to move into retail, taking goods off shelves or working in stockrooms. Prather thinks we will soon see robot waiters. For many applications, though, robots may not make financial sense. Walker gives the example of a delivery robot. "It has to be more cost-effective [than] a minimum-wage person on a zero-hours contract riding an electric scooter," he says.

Most roboticists I have spoken to say that a multipurpose home robot, one that can wash dishes, do the laundry and walk the dog, is still a long way off. "The era of useful humanoids has arrived, but the path to a truly general-purpose humanoid robot will be long and difficult and will take many years," says Boston Dynamics. Care robots, often touted as a solution to ageing populations, will be a particularly challenging prospect, Reed believes. "Let's get to the point where a robot can reliably take apart a laptop or make you a sandwich, and then think about how it might care for an elderly person," she says. That's assuming we even want robots involved in caregiving at all. As with art and poetry, perhaps some roles are still best left to humans.
