

Anthropic's Missionary Culture

The most ideologically driven company in Silicon Valley may have become its most commercially dangerous.

This week, a $300 billion sell-off in software and financial-services stocks was apparently triggered by Anthropic PBC, after the artificial intelligence startup released a new legal product.

However simplistic it may be to pin market moves on a single trigger, the jitters over Anthropic draw attention to its seemingly unstoppable pace of execution.

The company, which employs around 2,000 people, claims to have released more than 30 products and features in January alone.

On Thursday, it picked up the pace again with Claude Opus 4.6, a new model built for complex tasks that will almost certainly compete with traditional software-as-a-service (SaaS) providers such as Salesforce and ServiceNow.

OpenAI, the maker of ChatGPT, has twice as many employees as Anthropic, while Microsoft Corp. and Alphabet Inc.'s Google employ 228,000 and 183,000 people, respectively. These companies also have vast capital and extensive distribution networks.

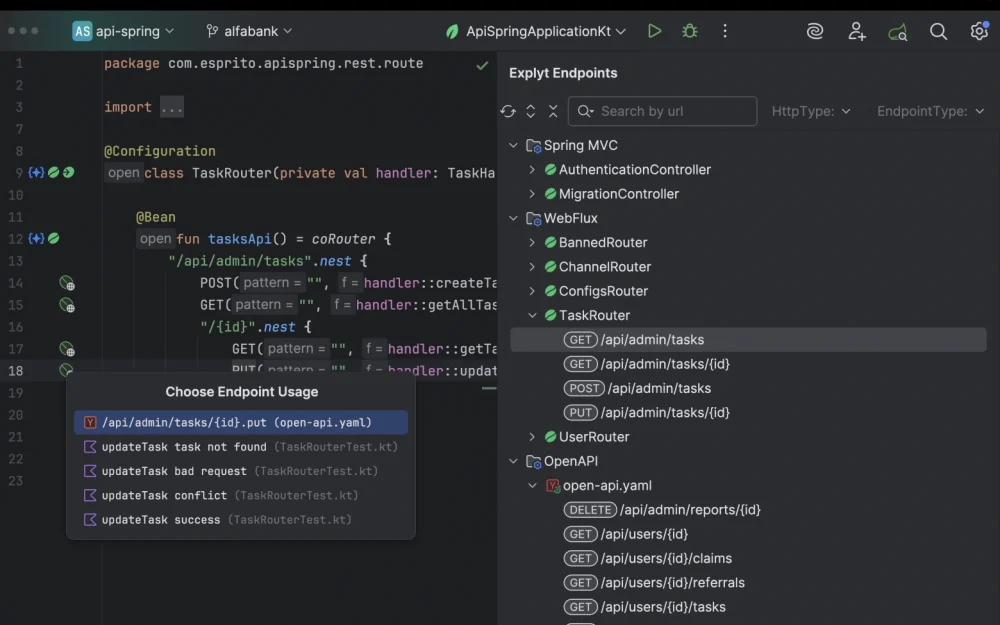

Yet Anthropic's tools for writing code and operating computers outstrip anything these giants have managed to launch. OpenAI and Microsoft have recently struggled to ship products with comparable impact.

Anthropic's relentless productivity is partly explained by a paradox: its obsession with its mission.

The company was founded by former OpenAI employees who believed OpenAI was too cavalier about safety, especially the existential risks that artificial intelligence poses to humanity.

At Anthropic, this concern has hardened into a kind of ideology, with Chief Executive Officer Dario Amodei as its high priest and visionary.

Twice a month, Amodei gathers his staff for the Dario Vision Quest, or DVQ, where the bespectacled CEO holds forth on building reliable AI systems aligned with human values, as well as broader topics such as geopolitics and the impact of Anthropic's technology on the labor market.

Last May, Amodei warned that artificial intelligence could wipe out up to half of all entry-level white-collar jobs within one to five years. His own company, driven by an almost religious zeal for safe AI, looks poised to help make that happen. Job security, it seems, is not part of Anthropic's mission.

People close to the company describe the atmosphere as cult-like: employees are united by the mission and believe in Amodei. "Ask anyone why they're here," Boris Cherny, one of the company's leading engineers, recently told me. "Pull them aside, and they'll tell you their goal is to make artificial intelligence safe. We exist to make artificial intelligence safe."

When Meta Platforms Inc. went on a major hiring spree for senior artificial intelligence researchers last year, it tried to win over Anthropic employees by assuring them that Meta would move away from building open-source AI systems, which anyone can use and modify for free. Anthropic staff regarded that approach as dangerous.

Earlier this month, Amodei published a 20,000-word essay on the looming threat that artificial intelligence poses to civilization, and the company unveiled a lengthy "constitution" for its flagship Claude system that suggests its AI might possess some semblance of consciousness or moral status.

This is as much philosophical musing as precaution: the constitution gives Claude clearer instructions on how to respond to the possibility of being shut down, a scenario the system might perceive as death.

Thanks to this constant focus on safety, the company's models are among the most honest on the market.

That means they are less likely to produce false answers and more likely to acknowledge what they do not know, according to a ranking compiled by researchers at Scale AI, which is backed by Meta.

That, in turn, has built trust among the company's growing roster of corporate clients.

Such an obsession is also unusual in an industry prone to mission drift, where tech companies are founded on lofty ideals of improving humanity's lot, until obligations to investors get in the way.

Remember Google's motto "Don't be evil"? Or OpenAI, founded as a nonprofit with the aim of "benefiting humanity" and unconstrained by the need to generate financial returns.

Anthropic's mission-driven culture has the added advantage of eliminating the internal friction that typically slows down corporations like Google and Microsoft, where getting employees to pull together toward the company's goals takes constant effort.

As a result, the safety-obsessed Amodei, with something of the mad scientist about him, is shipping more products than some of Silicon Valley's largest companies.

"Military historians often argue that the sense of fighting for a noble cause drives armies to act more effectively," says Sebastian Mallaby, author of an upcoming book on Google DeepMind and a senior fellow for international economics at the Council on Foreign Relations.

Anthropic's edge also comes from its focus on building one exceptionally effective coding tool, Claude Code, which has won the trust of corporate clients, he says, whereas OpenAI has spread itself across several directions. Sam Altman's company suffers from "the arrogance of leadership," he adds.

Anthropic is currently raising $10 billion at a $350 billion valuation, which means the pressure to prioritize growth over safety will only intensify.

Amodei has created a corporate culture that works.

The question is whether that culture can hold up as the stakes rise and the company keeps rattling markets, and possibly a great many jobs.
