Why and how neural networks are taught to be humane

We used to expect that machines would replace us in simple mechanical work, while areas such as art would always remain human. That assumption can already be disputed. Still, there is a view that because AI cannot experience emotions or build interpersonal relationships, "flexible" and "soft" skills will become increasingly valuable and well paid, and that teachers and healthcare workers will gradually come to earn much more than lawyers and financiers.

However, it is difficult to predict where AI will replace humans. Machines are being taught emotions, and humanity in general, quite successfully. The market for AI-based emotion recognition is already estimated at about $20 billion. So how are machines taught to understand and express emotions, and will this be useful or harmful to us? Let's figure it out.

How to use empathetic AI

Affective computing, also known as emotional computing or emotional AI, is a rapidly developing field. Companies working in it strive to create artificial intelligence that not only performs tasks but also understands the deeper meaning of communication with people and can read their emotions. AI capable of recognizing emotions has potential applications in many areas.

Security. A system that recognizes people's moods in a crowd or at important sites can notify local authorities if someone is behaving suspiciously or is under unusual stress. Crime prediction systems have been in development in the US for more than 10 years, and IBM has already introduced several such AI-based technologies; adding mood recognition to their feature set could improve these systems.

Another example from the field of personal safety is AI with emotion recognition built into cars. It can determine if the driver is in an unstable state and take measures accordingly.

Communication with an audience. One proposal, for example, is a device like Microsoft HoloLens glasses with AI that recognizes the emotions of each person in the hall. During a talk, the speaker could then get a hint from the computer about how the audience, on average, is receiving what is being said and, if necessary, change their behavior or words.

Such technologies can also be used in education. Neural-network methods for recognizing dynamic facial expressions can help determine, or even predict, students' engagement and emotional state.

Entertainment. Emotional AI can make the plot or other game parameters adapt to the player's emotions. Virtual reality offers especially wide scope for this.

Service and mental health. AI that recognizes emotions and changes content or its own behavior accordingly can help various service sectors, starting with simple call centers. There are also ideas to combine such AI, reading brain EEG and other indicators that are not outwardly visible, with virtual reality to create the most comfortable conditions, for example for rest and relaxation.

So, many see emotion-trained AI as more effective for almost any field. The question is how accurate and appropriate such programs can be.

What technologies are used to teach AI emotions

Emotional AI analyzes the many ways people express emotions and uses this data to formulate responses and reactions that mimic empathy. This goes beyond analyzing facial expressions or tone of voice: such systems can also use EEG data, eye tracking, heart rate variability, galvanic skin response, and so on.

Various algorithms and models are used to process these signals, such as support vector machines, neural networks, and deep learning, in particular deep convolutional neural networks (CNNs). These models are trained on large amounts of data and can predict emotional states with high accuracy. Here are the individual technologies on which comprehensive emotion recognition is based.

Facial Expression Recognition (FER). Such technologies detect and classify changes in facial expressions by analyzing facial images to assess a person's emotional state. Convolutional neural networks are the most effective for these tasks. It has been found that they successfully recognize emotions ranging from neutral to fearful or joyful.

Facial Microexpression Recognition (FMiR). This technology focuses on short-term and minor changes in facial expressions that occur, for example, if a person tries to hide their emotions. High-speed cameras and image processing methods are used to capture these fast and barely noticeable facial movements.

Dynamic Facial Expression Recognition (DFER). This is a technology that allows analyzing changes in facial expressions over time. It not only analyzes static facial data but also removes unnecessary frames during dynamic expressions. In addition, DFER takes into account contextual relationships between intra-pair and inter-pair frames, as well as static and dynamic aspects of facial expressions.
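The idea of removing unnecessary frames can be illustrated with a very small sketch. It assumes each frame is already flattened to grayscale values between 0 and 1 and uses a fixed difference threshold; both the `Frame` type and the `threshold` value are hypothetical, and real DFER systems typically learn which frames matter rather than applying a hand-set rule like this one.

```python
from typing import List, Sequence

# Assumption: a frame is a flat sequence of grayscale pixel values in [0, 1].
Frame = Sequence[float]


def mean_abs_diff(a: Frame, b: Frame) -> float:
    """Average absolute per-pixel difference between two equally sized frames."""
    if len(a) != len(b):
        raise ValueError("frames must have the same size")
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def drop_redundant_frames(frames: List[Frame], threshold: float = 0.05) -> List[int]:
    """Return indices of frames that differ enough from the last kept frame."""
    if not frames:
        return []
    kept = [0]  # always keep the first frame as the reference
    for i in range(1, len(frames)):
        if mean_abs_diff(frames[i], frames[kept[-1]]) >= threshold:
            kept.append(i)
    return kept


# Toy clip: a neutral face, two near-duplicates, then a clear change of expression.
clip = [
    [0.10, 0.10, 0.10, 0.10],
    [0.10, 0.11, 0.10, 0.10],
    [0.11, 0.10, 0.10, 0.11],
    [0.40, 0.45, 0.10, 0.50],
]
kept_indices = drop_redundant_frames(clip)
print(kept_indices)
```

Only the first frame and the frame where the expression visibly changes survive, so a downstream model would see fewer, more informative frames.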

Complex Expression Generation Methods (CEG). These technologies are developing rapidly thanks to deep learning methods and large language models. Generative adversarial networks and variational autoencoders recognize and generate dynamic emotions and microexpressions. Large generative models that combine visual and textual data, such as Sora, can generate facial expressions closely tied to words.

Audio Emotion Recognition (AER). Integrating fuzzy logic has significantly improved the accuracy of emotion analysis from sound. This technology determines the user's emotional state and responds to it, making human-computer interaction smoother and more natural. Mixed emotion models for speech transformation:

Enhance the naturalness and realism of emotion recognition;

Allow simulating complex reactions in scenarios without direct human involvement;

Improve security by recognizing emotional features to detect deepfakes.

Emotion Recognition in Text (TER). To develop the technology, user behavior on social networks is analyzed. A multimodal sentiment analysis model based on interactive attention mechanisms can combine text, audio, and video data to improve recognition accuracy.
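A rough sketch of how attention-style weights might combine text, audio, and video predictions is shown below. Everything in it is an assumption made for illustration: the `EMOTIONS` label set, the per-modality probabilities, and the fixed `relevance` scores. In an actual interactive attention model these scores would be learned from cross-modal interactions rather than set by hand.

```python
import math
from typing import Dict, List

EMOTIONS = ["anger", "joy", "neutral"]  # hypothetical label set


def softmax(scores: List[float]) -> List[float]:
    """Turn arbitrary scores into positive weights that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def fuse(modality_probs: Dict[str, List[float]], relevance: Dict[str, float]) -> List[float]:
    """Weight each modality's emotion distribution by its softmax-normalized relevance."""
    names = list(modality_probs)
    weights = softmax([relevance[n] for n in names])
    fused = [0.0] * len(EMOTIONS)
    for w, name in zip(weights, names):
        for i, p in enumerate(modality_probs[name]):
            fused[i] += w * p
    return fused


# Toy case: the words look neutral, but the voice and face suggest anger.
probs = {
    "text": [0.2, 0.1, 0.7],
    "audio": [0.8, 0.1, 0.1],
    "video": [0.6, 0.1, 0.3],
}
relevance = {"text": 0.0, "audio": 1.0, "video": 0.5}  # hypothetical attention scores

fused = fuse(probs, relevance)
predicted = EMOTIONS[fused.index(max(fused))]
print(predicted)
```

In this toy case the audio and video signals outweigh the neutral-looking text, which is the kind of correction multimodal analysis is meant to provide.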

Application of Emotion Recognition Based on Acoustic Speech Characteristics. Acoustic modeling, deep learning, and natural language processing technologies are used to achieve accurate emotion recognition and generation. A new method combining deep convolutional neural networks and acoustic features has achieved more than 93% accuracy in emotion recognition.
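To give a sense of what "acoustic features" can mean in practice, here is a minimal sketch of two classic ones, short-time energy and zero-crossing rate, computed per frame of raw samples. This is not the method behind the accuracy figure quoted above, whose details the article does not give; the function names and the toy signal are assumptions for illustration only.

```python
from typing import List, Tuple


def short_time_energy(frame: List[float]) -> float:
    """Mean squared amplitude of one frame of samples (a loudness cue)."""
    return sum(s * s for s in frame) / len(frame)


def zero_crossing_rate(frame: List[float]) -> float:
    """Fraction of adjacent sample pairs whose signs differ (a rough pitch/noisiness cue)."""
    crossings = sum(1 for a, b in zip(frame, frame[1:]) if (a >= 0) != (b >= 0))
    return crossings / (len(frame) - 1)


def frame_features(samples: List[float], frame_size: int) -> List[Tuple[float, float]]:
    """Split the signal into non-overlapping frames and compute both features per frame."""
    features = []
    for start in range(0, len(samples) - frame_size + 1, frame_size):
        frame = samples[start:start + frame_size]
        features.append((short_time_energy(frame), zero_crossing_rate(frame)))
    return features


# Toy signal: a quiet, steady stretch followed by a loud, rapidly oscillating one.
signal = [0.5, 0.5, 0.5, 0.5, 1.0, -1.0, 1.0, -1.0]
features = frame_features(signal, frame_size=4)
print(features)
```

Feature vectors like these, or richer ones such as spectrograms, would then be passed to a classifier such as a convolutional network.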

Body Language Reading and Gesture Emotion Recognition. New skeleton-based recognition systems, such as SAGN, use advanced graph network structures to analyze human skeletal movements in detail.

Virtual Reality Technology (VR). It has become an effective tool for eliciting and detecting emotions, especially in combination with neurophysiological methods such as EEG. Research has shown that virtual reality can effectively evoke emotions by simulating immersive scenarios while accurately tracking the user's emotional state through physiological feedback mechanisms.

Are there any practical developments?

Back in 2017, emotional AI was already being applied in the following ways:

Affectiva. Emotion recognition for a psychological thriller game, used to adjust the game's difficulty to the player's emotions. The more fear the player feels, the more obstacles appear, while keeping emotions under control makes the game easier;

Another example from the games industry is facial expression recognition from Faceware Technologies, used in the game Star Citizen to animate characters' faces to match the players' emotions;

Technology from the startup NuraLogix adapts advertising to the emotions of the person watching it. In 2017, it was already being tested in a number of Canadian department stores.
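The difficulty adaptation described in the thriller game example can be sketched in a few lines. This is a hypothetical illustration, not Affectiva's actual logic: it assumes the emotion recognizer outputs a `fear` score between 0 and 1 and that difficulty is a number on the same scale, nudged toward the fear level at each update.

```python
def adjust_difficulty(current: float, fear: float, rate: float = 0.5) -> float:
    """Move difficulty part of the way toward the player's fear level.

    Assumptions: `current` and `fear` are in [0, 1]; `rate` controls how
    quickly the game reacts (0 = never, 1 = immediately).
    """
    if not 0.0 <= fear <= 1.0:
        raise ValueError("fear must be between 0 and 1")
    new_value = current + rate * (fear - current)
    return min(1.0, max(0.0, new_value))


# A frightened player faces more obstacles; a calm one gets an easier game.
harder = adjust_difficulty(current=0.5, fear=0.9)
easier = adjust_difficulty(current=0.5, fear=0.1)
print(harder, easier)
```

Moving only part of the way toward the target on each update keeps the game from swinging abruptly when the recognizer misreads a single frame.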

There are many other examples.

Cogito. This AI is designed to help employees empathize with upset callers and to improve productivity.

FaceReader. Developed by a company from the Netherlands, it assesses emotions based on a number of characteristics. It can now even recognize a neutral state and analyze contempt, and it works with both photos and real-time video. The system adapts to whether the subject is a child, an adult, or an elderly person. The service is already used by more than 1,000 universities, businesses, and institutions. For analysis, an accurate artificial model of the face is built from almost 500 key points and then classified by comparison with known data.

NuraLogix has a solution that is more medical in nature but can also detect stress and other states, including emotional ones. Working from just a photograph, their program:

Extracts data on blood flow in the face. This information is sent to the cloud for processing;

Applies signal processing methods and deep learning models to predict physiological and psychological effects and states.

What problems remain

Despite significant progress in facial expression recognition technologies, the accuracy of this process remains an issue due to blurred expressions and low-quality images.

In addition, cultural differences are a serious barrier. Differences in mood expression across cultures can lead algorithms to misinterpret or misclassify emotions.

Black box and inability to explain

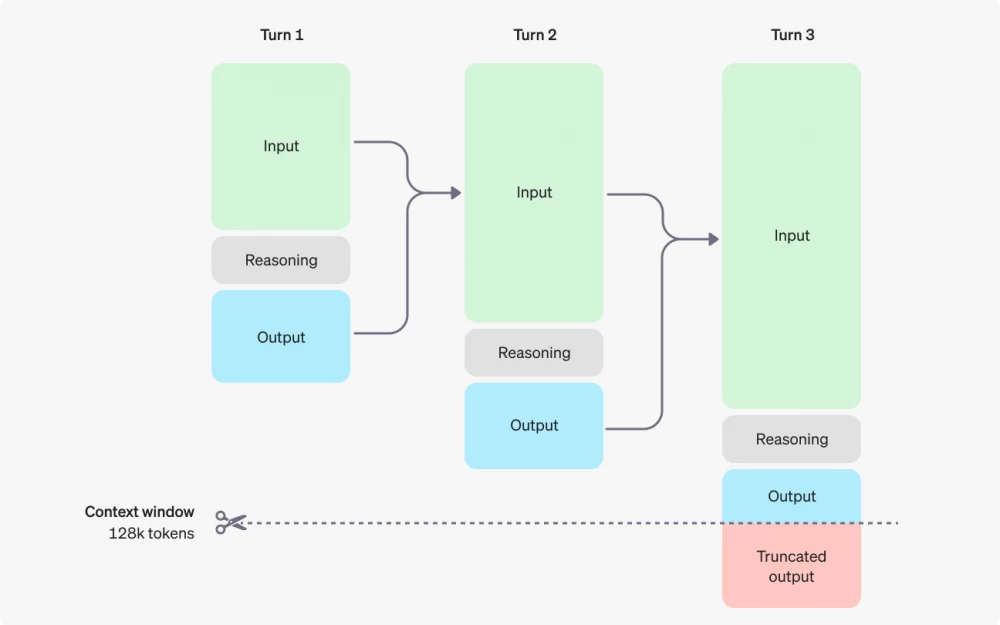

As early as 2018, almost every AI system suffered from one problem that has not been fully resolved to this day. AI works on the basis of knowledge but does not replenish it while it operates. That is, every day it accumulates huge knowledge bases but does not put them to use.

The other side of the problem is the so-called "black box". AI learns on its own, and that learning is opaque, so its creators could not explain why the system came to a particular conclusion. Creating a machine capable of explaining this on its own also turned out to be difficult. When it comes to emotions, especially generating rather than reading them, the ability to explain decisions can be critically important.

Therefore, the concept of "Explainable AI" has gained popularity in recent years. This should help build trust between people and AI systems. When people understand why an AI system made a particular decision, they are more likely to accept it and use it in their daily lives.

Ability to make mistakes

Explaining one's decisions is not the only human ability that AI can adopt. It is important to remember the ability to make mistakes. When the chatbot Eugene Goostman was reported to have passed the Turing test in 2014, it was aided by built-in deception.

Eugene imitated a 13-year-old boy for whom English was a second language. This meant that his mistakes in syntax and grammar, as well as his gaps in knowledge, were perceived as naivety and immaturity rather than as deficiencies in natural language understanding. That is, the AI did not make these mistakes by accident; it was designed that way from the beginning.

How AI changes our perception of ourselves

With the development of artificial intelligence technologies, people are increasingly thinking about how they differ from machines. It is believed that the emergence of artificial intelligence triggers a new identity crisis in society and slightly changes our ideas about what makes a person a person.

In one study, several experiments were conducted. In one of them, about 800 people participated, who were divided into two groups. Half of the participants read an article about the revolution in artificial intelligence, and the other half read an article about the outstanding properties of trees. Then the participants were asked to evaluate how important 20 different qualities are for a person.

Participants who read the article about artificial intelligence rated qualities such as uniqueness, morality, and the ability to communicate as more significant than those who read the article about trees. In another experiment, the subjects were simply told that artificial intelligence continues to develop. The results were similar: every time AI achievements were mentioned, the participants rated human qualities as more important.

| Qualities shared with AI | Uniquely human qualities |
| --- | --- |
| Performing calculations | Having culture |
| Using language | Holding beliefs |
| Implementing rules | Having a sense of humor |
| Predicting the future | Being moral |
| Logic | Being spiritual |
| Communication | Having desires |
| Facial recognition | Feeling happy |
| Memory | Feeling love |
| Sensing temperature | Having individuality |
| Sound recognition | Forming relationships |

These qualities were proposed to the subjects for evaluation and were subsequently divided into those common with artificial intelligence and those unique to humans.

Can robots be better than humans at empathy

So, AI can be taught to mimic emotions. At the same time, people in the modern digital world are becoming worse at recognizing them. Against this background, the question arises whether AI can help us recognize the feelings of others and experience our own, and a lively debate about this has unfolded among experts.

In the context of digital communication, when people increasingly communicate online, problems arise with creating truly empathetic relationships. The lack of physical presence next to others makes it difficult to understand their feelings and emotions.

This is where artificial intelligence can come to the rescue. Teaching AI to mimic empathy is more than possible. Artificial intelligence and machine learning systems are very good at finding patterns in data. If we provide AI with many examples of empathetic text, AI will be able to identify patterns and signals that evoke or demonstrate empathy.

AI can analyze and evaluate characteristics such as tone and emotion in speech. This can help the person receiving a message better understand what was meant, and help the sender "speak" by showing how their message might be interpreted.

However, this approach is also compared to "using a crutch for walking," which "can lead to muscle atrophy." The question arises whether a worker who depends on such a system will be able to do their job effectively if it stops working.

Moreover, one of the possible drawbacks of using AI to teach empathy is that people may become more attached to robots than to other people. A machine cannot make a choice contrary to its program. A person, on the other hand, can be compassionate one day and ruthless the next. That is, AI can make mistakes less often than a person even in such an area as empathy.

Results

In 2016, the market for emotion detection and recognition systems was valued at $6.72 billion. At that time, it was predicted to reach between $20 billion and $40 billion by 2021. In practice, the market reached $19.5 billion in 2022, and forecasts put it at $58.3 billion by 2026. There are already many startups in this area, and large corporations are ready to buy them for hundreds of millions of dollars.
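To put these figures in perspective, the implied compound annual growth rate (CAGR) can be worked out directly. The sketch below uses only the numbers quoted above and assumes they measure the same market in comparable terms, which may not hold if they come from different reports.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate: the constant yearly rate linking two values."""
    return (end_value / start_value) ** (1 / years) - 1


# Figures in billions of dollars, taken from the paragraph above.
observed = cagr(6.72, 19.5, 2022 - 2016)   # actual growth, 2016 to 2022
forecast = cagr(19.5, 58.3, 2026 - 2022)   # growth the 2026 forecast would require

print(f"observed: {observed:.1%} per year")
print(f"forecast: {forecast:.1%} per year")
```

On these numbers the market grew by roughly 19% a year from 2016 to 2022, while the 2026 forecast implies a bit over 31% a year, so it assumes a marked acceleration.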

The biggest breakthrough is expected when all methods of emotion analysis can be used in combination and AI is taught to adapt to the personal, cultural, and age characteristics of clients.
