The AI boom has brought chipmaking back to the forefront of computing

In a Technology Quarterly special issue, The Economist's Shailesh Chitnis discusses how the surge of interest in artificial intelligence is reshaping the chip industry and disrupting "Moore's Law".

Chitnis argues that the industry's technological challenges matter more than its political ones.

Disclaimer 1: this is a free translation of a column Shailesh Chitnis wrote for The Economist. The translation was prepared by the editorial team of "Technocracy". To stay up to date with new materials, subscribe to "Voice of Technocracy", where we regularly cover news about AI, LLMs, and RAG and share useful must-reads and current events.


Disclaimer 2: the text mentions the company Meta, which is designated an extremist organization in the Russian Federation.

A hundred years ago, 391 San Antonio Road in Mountain View, California, was the site of an apricot-packing warehouse. Today it is just one of many low-rise office buildings on busy streets that are home to Silicon Valley startups and future billionaires. In front of it, though, stand three large and strange sculptures: two- and three-legged forms resembling water towers. They are giant versions of diodes and transistors, the components of an electronic circuit. In 1956 the Shockley Semiconductor Laboratory, a startup devoted to making such components entirely from silicon, opened on this site. This is how the history of Silicon Valley began.

The company founded by William Shockley, one of the inventors of the transistor, never achieved commercial success, but its work on silicon did. In 1957 eight of Shockley's employees, whom he called "traitors", left to found Fairchild Semiconductor less than two kilometers from the laboratory. Among them were Gordon Moore and Robert Noyce, future co-founders of the chip giant Intel, and Eugene Kleiner, co-founder of Kleiner Perkins, a pioneering venture-capital firm. Most of Silicon Valley's best-known technology companies can trace their roots, directly or indirectly, to these first Fairchild employees.

Before the advent of semiconductor components, computers were room-sized machines built from fragile, unreliable vacuum tubes. Semiconductors, solid materials in which the flow of electric current can be controlled, offered components that were more reliable, versatile, and compact. Once such components began to be made mainly from silicon, many of them could be placed on a single piece of the material. Miniature transistors, diodes, and other elements on silicon "chips" could be combined into "integrated circuits" designed to store or process data.

In 1965, while still at Fairchild, Moore observed that the number of transistors that could be placed on an integrated circuit for a given cost was doubling every year (he later revised the period to two years). His observation, known as "Moore's Law," proved enormously consequential. Chips produced in 1971 had 200 transistors per square millimeter; in 2023 the MI300 processor from the American company AMD packed 150 million transistors into the same area. The smaller transistors became, the faster they could switch on and off: the MI300's components are thousands of times faster than their predecessors of 50 years ago.
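As a rough check on these figures, one can back out the doubling period they imply. This is a minimal sketch that assumes smooth exponential growth between the two data points (real progress was lumpier) and treats area density as a stand-in for Moore's per-cost transistor count; the function name `implied_doubling_time` is hypothetical.

```python
import math


def implied_doubling_time(density_start: float, density_end: float, years: float) -> float:
    """Years per doubling implied by exponential growth between two density figures."""
    # Number of doublings needed to get from the start density to the end density.
    doublings = math.log2(density_end / density_start)
    return years / doublings


# Figures quoted in the text: 200 transistors/mm^2 (1971) vs 150 million/mm^2 (AMD MI300, 2023).
ratio = 150e6 / 200
t = implied_doubling_time(200, 150e6, 2023 - 1971)
print(f"density grew {ratio:,.0f}x, i.e. one doubling every {t:.2f} years")
```

With these two figures, density grew about 750,000-fold over 52 years, which works out to one doubling roughly every 2.7 years, in the same ballpark as Moore's revised two-year period.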

All the major breakthroughs in computing, from personal computers and the internet to smartphones and artificial intelligence (AI), can be traced to transistors becoming smaller, faster, and cheaper. Advances in transistor technology have been the driving force behind technological progress.

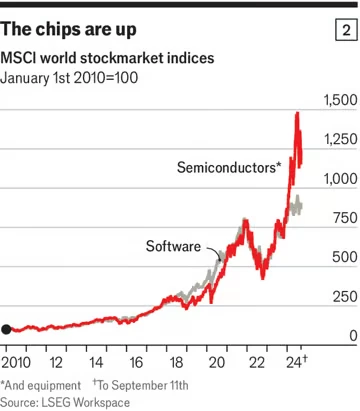

For a time, the technological importance of silicon chips was mirrored in the importance of the companies that made them. In the 1970s IBM, which made chips as well as the computers and software that ran on them, was an unrivaled giant. In the 1980s Microsoft showed that a company selling only software could be even more successful. But Intel, which made the chips on which Microsoft's software ran, was also a huge force. Before the dot-com crash of 2000, Intel was the world's sixth-largest company by market capitalization.

After the crash, "Web 2.0" services from companies such as Google and Meta took center stage, and the semiconductors on which their platforms were built came to be seen as commodities. Explaining the growth of big tech in 2011, the venture capitalist Marc Andreessen declared that "software is eating the world."

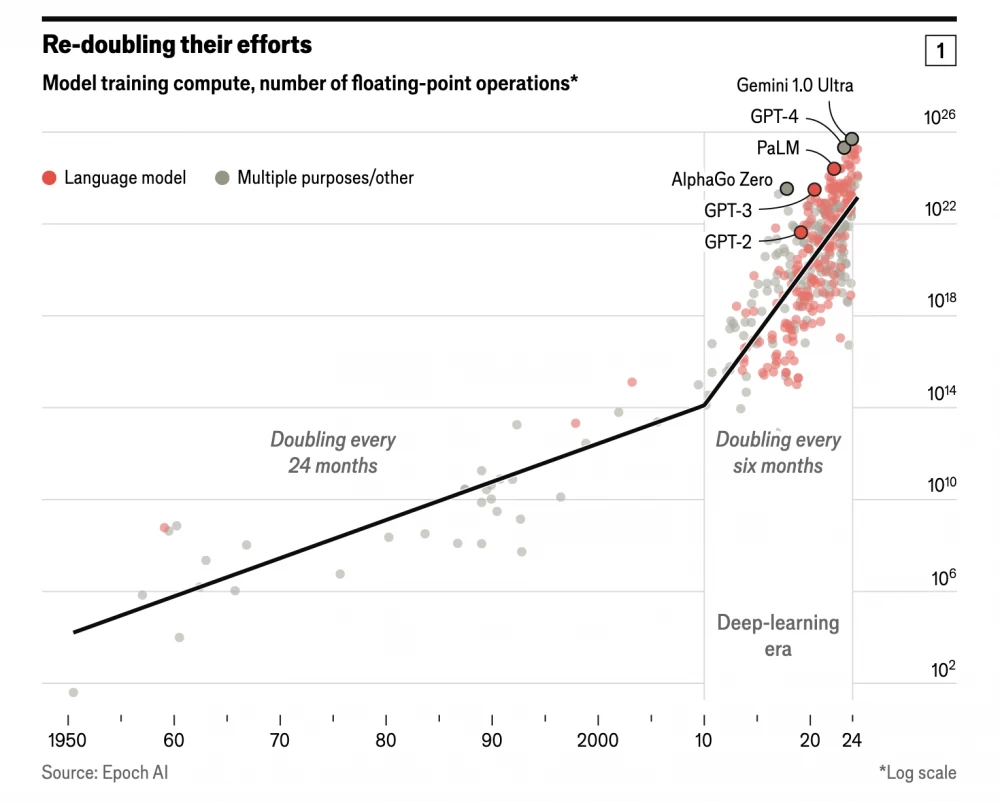

But the AI boom has changed the picture. Developing AI requires colossal computing power. Until 2010 the amount of computation needed to train leading AI systems grew in line with Moore's law, doubling every 20 months; now it doubles every six months. As a result, demand for ever more powerful chips keeps rising. Nvidia, an American company whose chips are ideally suited to the large language models (LLMs) that dominate AI, is now the world's third most valuable company.
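To see how large the gap between the two doubling periods is, here is a small sketch that converts each one into a growth factor per year and per decade. It uses only the 20-month and six-month figures from the text; the helper name `growth_factor` is hypothetical.

```python
def growth_factor(months: float, doubling_months: float) -> float:
    """Multiplicative growth over `months` for a quantity that doubles every `doubling_months`."""
    return 2 ** (months / doubling_months)


# Doubling periods for training compute quoted in the text.
for label, doubling in [("pre-2010 (20-month doubling)", 20), ("recent (6-month doubling)", 6)]:
    per_year = growth_factor(12, doubling)
    per_decade = growth_factor(120, doubling)
    print(f"{label}: x{per_year:.2f} per year, x{per_decade:,.0f} per decade")
```

A 20-month doubling means about 1.5x a year, or 64x over a decade; a six-month doubling means 4x a year, roughly a millionfold (2^20) over a decade.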

Now that AI has made chips fashionable again, companies hoping to succeed in the field are starting to develop their own microchips. The motive lies not only in training models but also in running them afterwards (so-called "inference"). Running an LLM takes substantial computing resources, and it has to be done billions of times a day. Because specialized circuits handle such tasks more efficiently than general-purpose chips, some companies working with LLMs prefer custom silicon. Apple, Amazon, Microsoft, and Meta have already invested in building AI chips of their own. Google designs more data-center processors than any other company except Nvidia and Intel. Seven of the world's ten most valuable companies are now in the chip business.

A chip's technological sophistication depends mainly on the size of its features; today the leading edge means features smaller than 7nm. This is the level at which chips for the most important AI workloads are made. Yet more than 90% of the semiconductor industry's manufacturing capacity produces chips with features of 7nm and larger. These chips are less sophisticated but far more widespread: they go into TVs, refrigerators, cars, and machinery.

In 2021, at the height of the COVID-19 pandemic, an acute shortage of such chips disrupted production in many industries, including electronics and car manufacturing. In pursuit of efficiency, the industry had globalized: chips were designed in America; the equipment to make them came from Europe and Japan; the factories using that equipment were in Taiwan and South Korea; and packaging and assembly into devices took place in China and Malaysia. When the pandemic disrupted these supply chains, governments took notice.

In August 2022 the US government approved a package of subsidies and tax incentives worth about $50 billion to bring chip production back to America. Other regions followed suit: the European Union, Japan, and South Korea have promised nearly $94 billion in subsidies. Matters were complicated by American efforts to restrict China's access to advanced chips and chipmaking technology through export bans. China responded by restricting exports of two materials vital to chip production.

But chipmakers' biggest problems lie not in industrial policy or national rivalries but in technology. For five decades, shrinking transistors improved chip performance without increasing power consumption. Now, as transistor density rises and AI models grow larger, power consumption is soaring. Sustaining exponential performance growth will require new ideas. Some, such as tighter integration of hardware and software, are incremental. Others are more radical: rethinking how silicon is used, or abandoning digital data processing in favor of other approaches. In upcoming articles we will look at how such advances could keep the exponential engine running.
