Results for the tag "ai":

Hello everyone! Nikolay Edomsky here, Head of the Network Engineering Group at EDINOM TSUPIS.

💻 Using Nginx to load-balance LLM chat sessions. There are plenty of examples of connecting LLMs to a Telegram bot, but none of them cover distributing the load across processes once the number of users grows: every tutorial proposes a monolith running as a single replica. This article explains how to balance a bot's load for thousands of users, including after connecting the Model Context Protocol (MCP) for integrations.
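The teaser does not show the article's Nginx configuration. One common way to keep every message of a chat on the same bot replica is hash-based routing; Nginx's `upstream` block offers a `hash ... consistent` directive for this. The Python sketch below models the same idea (a ketama-style consistent-hash ring), assuming the router can see each update's chat ID; the backend addresses and the class name are hypothetical.

```python
import bisect
import hashlib


def _hash(key: str) -> int:
    # Stable 64-bit hash from MD5 (Python's built-in hash() is salted per process).
    return int.from_bytes(hashlib.md5(key.encode()).digest()[:8], "big")


class ConsistentHashRing:
    """Hypothetical router: maps chat IDs to backend replicas so a chat sticks to one process."""

    def __init__(self, backends, vnodes=100):
        self._ring = []  # sorted list of (hash, backend) virtual nodes
        for backend in backends:
            for i in range(vnodes):
                self._ring.append((_hash(f"{backend}#{i}"), backend))
        self._ring.sort()
        self._keys = [h for h, _ in self._ring]

    def backend_for(self, chat_id: int) -> str:
        # Walk clockwise to the first virtual node at or after the chat's hash.
        idx = bisect.bisect_left(self._keys, _hash(str(chat_id)))
        if idx == len(self._keys):
            idx = 0  # wrap around the ring
        return self._ring[idx][1]


ring = ConsistentHashRing(["bot-1:8000", "bot-2:8000", "bot-3:8000"])
print(ring.backend_for(123456789))
```

Because each backend owns many virtual nodes, removing one replica only remaps the chats that lived on it; all other chats keep their sessions.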

This article is a translation of a blog post by Luciano Nooijen published on April 1, 2025.

Good night to everyone :)

Holidays, public holidays, and any "extra" days off give attackers an opportunity to launch an attack. Their calculation is simple: the fewer "defending" specialists are at their workplaces, the higher the chances of breaching the perimeter, gaining a foothold, and causing damage. Last year was no exception: in early May 2024, we were asked to help investigate two serious cybersecurity incidents that occurred during the May holidays. The attackers deliberately destroyed the virtual infrastructure of large organizations and temporarily paralyzed their business operations. The long weekend was ruined not only for our on-duty experts but also for the staff of the affected organizations, who had to trade nature and barbecues for servers and logs.

Today we’ll talk about a topic that sparks lively interest among many developers and AI enthusiasts — integrating large language models like DeepSeek or ChatGPT with your own knowledge base.
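A minimal sketch of the retrieval step such an integration typically relies on (retrieval-augmented generation): rank knowledge-base snippets by similarity to the question and paste the best ones into the prompt. It assumes plain bag-of-words cosine similarity instead of the vector embeddings a production system would use, and the function names are hypothetical; the resulting prompt would then be sent to DeepSeek, ChatGPT, or another model.

```python
import math
import re
from collections import Counter


def _tokens(text: str) -> Counter:
    # Lowercased word counts; a stand-in for a real embedding model.
    return Counter(re.findall(r"\w+", text.lower()))


def _cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question: str, docs: list[str], k: int = 2) -> list[str]:
    # Return the k snippets most similar to the question.
    q = _tokens(question)
    return sorted(docs, key=lambda d: _cosine(q, _tokens(d)), reverse=True)[:k]


def build_prompt(question: str, docs: list[str], k: int = 2) -> str:
    # Ground the model's answer in the retrieved snippets only.
    context = "\n".join(f"- {d}" for d in retrieve(question, docs, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"
```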

I explain in detail, with examples, how to create infographics, redesign interiors, prototype websites, and develop advertising concepts, all with simple text prompts.

Companies are increasingly turning to AI to transform their business processes. However, a successful AI transformation requires not just automating individual tasks but fundamentally rethinking the processes themselves: a kind of "moving from improving saddles to creating automobiles." This article examines two aspects of such a transformation.

This article discusses the AI integration packages from Effect, a set of tools designed to simplify working with large language models in modern applications. It describes in detail how to use provider-agnostic services to build AI functionality without being tied to a specific provider, which reduces the amount of "glue code" and lowers technical debt.
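Effect itself is a TypeScript library, and its actual API is not shown here. The Python sketch below only illustrates the general pattern the teaser describes: application code depends on an abstract model interface, and concrete providers plug in behind it. `LanguageModel`, `EchoModel`, `UpperModel`, and `summarize` are hypothetical names.

```python
from typing import Protocol


class LanguageModel(Protocol):
    """Provider-agnostic interface; any vendor adapter just needs this method."""

    def generate(self, prompt: str) -> str: ...


class EchoModel:
    """Hypothetical stand-in provider, handy in tests."""

    def generate(self, prompt: str) -> str:
        return f"echo: {prompt}"


class UpperModel:
    """Second hypothetical provider, showing a swap without touching business code."""

    def generate(self, prompt: str) -> str:
        return prompt.upper()


def summarize(model: LanguageModel, text: str) -> str:
    # Business logic depends only on the interface, never on a vendor SDK.
    return model.generate(f"Summarize: {text}")


print(summarize(EchoModel(), "quarterly report"))
```

Swapping providers then means passing a different object, so the "glue code" per vendor stays confined to one adapter class.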

We at MWS have launched a language model aggregator that lets you work with multiple LLMs through a single interface. MWS GPT offers MTS's own models, external models such as DeepSeek, and customers' own models. These models can easily be connected to any corporate system or chatbot via an API.

Don’t let your thoughts become training material for AI — or leak in a data breach. It doesn’t matter which chatbot you choose: the more you share, the more useful it becomes.

Seryozha died on Wednesday. His avatar was activated on Friday.

Machine learning algorithms are developed with many goals in mind. One of them is to understand how our brain works: an amazing supercomputer that learns on the fly, predicts future scenarios, and manages countless processes simultaneously.

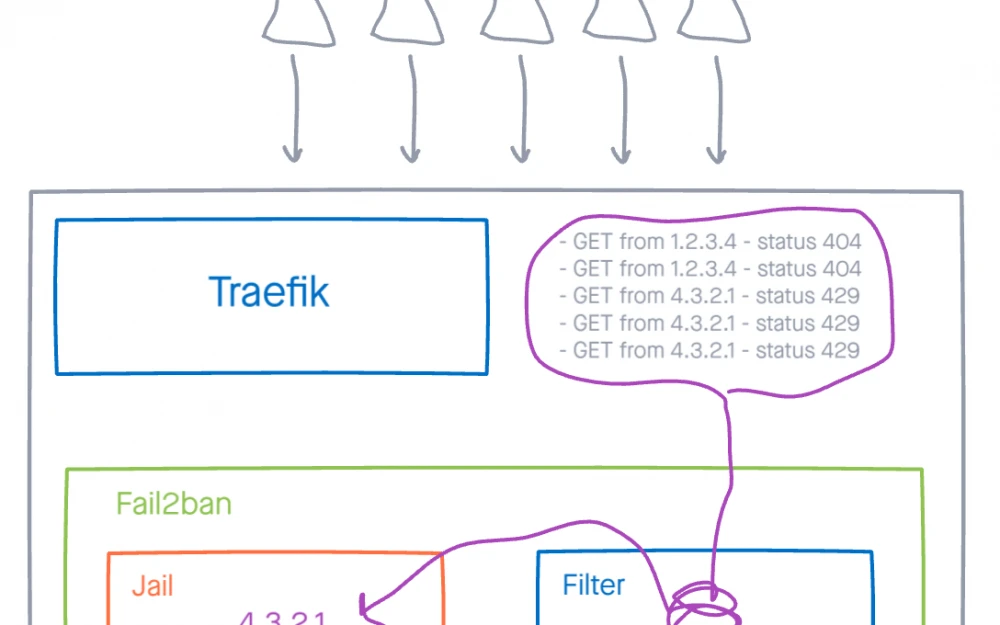

On the access nodes where our clients create tunnels, we currently use Traefik to terminate HTTP traffic. Recently, one of the domains started receiving thousands of requests per second, which drove the web server to 100% CPU and affected all client traffic on the node in that region: users reported freezes and timeouts. This is the story of how we used fail2ban to block DDoS floods based on access logs.
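fail2ban works by matching log lines against a filter's `failregex` and banning a client once it produces `maxretry` matches within `findtime` seconds (the ban lasts `bantime` seconds). The Python sketch below models only that counting logic over access-log lines. The log format is a hypothetical simplification (Traefik's real access log looks different), and the ban itself, which fail2ban performs through a firewall action, is left out.

```python
import re
from collections import defaultdict, deque

# Hypothetical access-log format: "<unix_ts> <client_ip> <host> <path>".
LINE_RE = re.compile(r"^(?P<ts>\d+(?:\.\d+)?) (?P<ip>\S+) (?P<host>\S+) ")


class FloodDetector:
    """fail2ban-style counting: flag an IP once it reaches maxretry hits within findtime seconds."""

    def __init__(self, maxretry: int, findtime: float):
        self.maxretry = maxretry
        self.findtime = findtime
        self._hits = defaultdict(deque)  # ip -> timestamps inside the current window
        self.banned = set()

    def feed(self, line: str):
        """Process one log line; return the IP if this line triggers a ban, else None."""
        m = LINE_RE.match(line)
        if not m:
            return None  # unparsable line, like a non-matching failregex
        ts, ip = float(m["ts"]), m["ip"]
        if ip in self.banned:
            return None
        window = self._hits[ip]
        window.append(ts)
        # Drop timestamps that have slid out of the findtime window.
        while window and window[0] <= ts - self.findtime:
            window.popleft()
        if len(window) >= self.maxretry:
            self.banned.add(ip)
            del self._hits[ip]
            return ip
        return None
```

A per-IP sliding window keeps memory proportional to recent requests rather than to total traffic, which matters when a single domain is receiving thousands of requests per second.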

Agents are super buggy. In our company projects, we noticed that LangChain started to perform worse. In multi-agent systems, agents often loop because they don't understand when they have completed the final action, fail to call each other when needed, or simply return malformed JSON. In short, building agent systems has become harder, and we even started considering simplifying them by dropping many of the agents. Then, just a week ago, OpenAI updated its SDK for building agents and opened API access to new tools. So I started testing.
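The failure modes listed above (endless loops and malformed JSON) are often mitigated with a thin guard around the agent loop, whatever the framework. The sketch below is not the OpenAI Agents SDK or LangChain API; it assumes a hypothetical `step` callable that returns the model's raw text, caps the number of steps, and retries when the output is not a JSON object.

```python
import json
from typing import Callable


class AgentLoopError(RuntimeError):
    pass


def run_agent(step: Callable[[list], str], max_steps: int = 5, max_json_retries: int = 2) -> dict:
    """Drive a hypothetical agent `step` until it returns a JSON object with "final": true."""
    history = []
    for _ in range(max_steps):
        for _attempt in range(max_json_retries + 1):
            raw = step(history)
            try:
                action = json.loads(raw)
                if not isinstance(action, dict):
                    raise ValueError("expected a JSON object")
                break  # valid action, stop retrying
            except ValueError as err:  # json.JSONDecodeError subclasses ValueError
                history.append({"error": f"invalid JSON: {err}"})
        else:
            raise AgentLoopError("agent kept returning malformed JSON")
        history.append(action)
        if action.get("final"):
            return action
    raise AgentLoopError(f"no final action after {max_steps} steps")
```

Feeding the parse error back into `history` gives the model a chance to correct itself, while the hard step cap turns a silent infinite loop into an explicit error.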

Becoming a speaker at OFFZONE is not easy. Every year we get questions: How does the CFP work? Which topics are better to choose? How do you submit an application correctly? What do speakers get?

AI programming assistants have burst into developers' daily lives at incredible speed. But what lies behind the convenience: acceleration or superficial thinking?